Beyond Docker: Emulation, Orchestration, and Virtualization on Apple Silicon

Introduction

Over the last five years, I've delved deep into the world of microservices running in large Kubernetes clusters. My typical workflow involves building solutions locally on my laptop, testing them thoroughly, and then committing my changes to the master branch.

Docker had long been my go-to development environment. However, Docker's recent licensing change prompted me to look for alternative setups. I was also looking for a Kubernetes environment compatible with both ARM and x64 images.

My requirements were straightforward:

- A Docker (or comparable) environment to run and coordinate single containers, complete with docker-compose capabilities.

- A Kubernetes environment for more complex deployments.

- This environment should be usable with Quarkus' Dev Services. Quarkus has a handy feature that automatically provisions, in your Docker or Podman environment, the services your microservice needs. For instance, if you add a Kafka dependency to a Quarkus application, Quarkus will pull and run a Kafka image via Docker or Podman during development and configure your application to communicate with it.

- Simple to set up.

- It should not be Docker Desktop.

In this article, I detail my exploration of different container and Kubernetes solutions, especially those considered as potential Docker alternatives for microservice development. While not exhaustive, this article offers a substantial overview. The primary aims are to:

- Clarify the underlying software components many of these tools employ.

- Offer insights into establishing a development environment on macOS, though much of this information also applies to Windows.

- Equip readers with foundational knowledge that allows for effective utilization and combination of different technologies to meet specific needs.

It's worth noting that many solutions discussed here can be combined for specific outcomes, even if they don't initially cover every desired feature. For instance, a combination like Minikube and Podman might be beneficial, although it's not the main focus of this post.

Concepts

First, I'd like to examine some containerization concepts that shed light on the software stack we use when setting up a container-enabled development environment.

Virtualizers & Container Runtimes

A virtualizer, more commonly referred to as "virtualization software" or "hypervisor," is a software layer or platform that allows multiple operating systems to run concurrently on a single physical machine. It creates virtual machines (VMs), each of which acts like a distinct physical device, capable of running its own operating system and applications independently.

A container runtime is the software responsible for running and managing containers, which are lightweight, isolated units designed to execute applications and their dependencies consistently across various environments. The runtime handles tasks such as pulling container images; creating, starting, and monitoring containers; keeping them isolated from other processes; and providing them with the necessary resources. Popular container runtimes include Docker, containerd, and runc.

Based on the above, when the container runtime itself runs inside a VM, the architectures it can run depend on the architectures supported by the underlying hypervisor (including any emulation it provides).

This means that whether your Docker (or Podman or anything else) environment supports both x64 and ARM architectures is dependent on whether the hypervisor supports those architectures.
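Part of what makes this confusing is that every layer names architectures differently: Docker and OCI platform strings say "amd64" and "arm64", the Linux kernel and Lima say "x86_64" and "aarch64", and prose (including this article) often says "x64" or "ARM". The Python sketch below normalizes these names to the ones Docker uses in platform strings; the alias table is my own summary of the names that appear here, not an official or exhaustive list.

```python
import platform

# Architecture aliases used by different tools. Docker/OCI say "amd64" and
# "arm64", the Linux kernel and Lima say "x86_64" and "aarch64", and prose
# often says "x64". 32-bit x86 ("i386") is a different architecture ("386").
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "x64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "i386": "386",
    "i686": "386",
}


def oci_arch(name: str) -> str:
    """Map an architecture name to the name used in OCI/Docker platforms."""
    key = name.strip().lower()
    try:
        return _ARCH_ALIASES[key]
    except KeyError:
        raise ValueError(f"unknown architecture: {name!r}") from None


def host_platform() -> str:
    """Platform string (e.g. 'linux/arm64') for a Linux VM native to this CPU."""
    # A native Python on Apple Silicon reports "arm64"; inside an arm64 Linux
    # VM it reports "aarch64". Both map to linux/arm64.
    return "linux/" + oci_arch(platform.machine())
```

On an Apple Silicon Mac, anything that does not map to arm64 has to be emulated somewhere in the stack.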

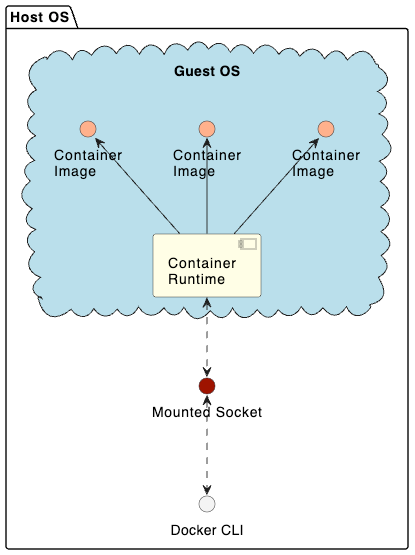

When running on Linux, Docker leverages native OS features such as namespaces and cgroups to provide containerization. As a result, there's no need for a VM on Linux. On macOS and Windows, however, the situation changes and virtualization becomes necessary. These platforms typically involve the following elements:

- A virtualization mechanism for starting a VM (such as HyperKit, QEMU, etc.)

- An internal VM installation of the container runtime

- Integration of the file system to ensure host-to-guest folder mounting

Depending on the tools you choose, the container runtime's socket may also be exposed directly on your host. For example, Docker forwards the docker.sock socket to your host, enabling the CLI on your host to talk to the Docker daemon running inside the virtual machine.
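To make the socket idea concrete, here is a minimal Python sketch of what a client does with that socket: it speaks plain HTTP to the Docker Engine API over a Unix domain socket. It assumes a unix:// DOCKER_HOST value (or the conventional /var/run/docker.sock default) and calls the Engine API's GET /version endpoint; the class and function names are my own, and this is an illustration rather than how the docker CLI is actually implemented.

```python
import http.client
import json
import os
import socket
from typing import Optional
from urllib.parse import urlparse

# Conventional Docker socket location when DOCKER_HOST is not set.
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def socket_path(docker_host: str) -> str:
    """Extract the filesystem path from a unix:// DOCKER_HOST value."""
    parsed = urlparse(docker_host)
    if parsed.scheme != "unix" or not parsed.path:
        raise ValueError(f"not a unix socket address: {docker_host!r}")
    return parsed.path


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills the Host header; the socket path is what matters.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_version(docker_host: Optional[str] = None) -> dict:
    """Call GET /version on the engine behind DOCKER_HOST (or the default socket)."""
    host = docker_host or os.environ.get("DOCKER_HOST", DEFAULT_DOCKER_HOST)
    conn = UnixHTTPConnection(socket_path(host))
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            raise RuntimeError(f"engine returned HTTP {response.status}")
        return json.loads(response.read())
    finally:
        conn.close()
```

With a reachable socket, the "Arch" field of engine_version() should describe the machine the daemon runs on, which on a Mac is the Linux VM rather than macOS itself.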

Docker Ecosystem

When we are talking about Docker, we usually talk about the following tools:

- Docker runtime. Uses containerd for its low-level container management.

- Docker CLI. A command-line tool for interacting with Docker daemons. On macOS, you can install the docker CLI (with shell auto-completion) and docker-compose using Homebrew: brew install docker docker-compose. Note that this installs only the CLI tools. It installs neither the Docker engine nor Docker Desktop.

- Docker Desktop. A full package containing the Docker runtime and the Docker CLI. It also provides an optional Kubernetes installation that runs on the Docker runtime, plus a desktop application with a GUI for changing Docker engine parameters. If you download and install the full Docker Desktop dmg from the website, you install the entire Docker stack: the docker command-line tool, the Docker container engine, and the Docker Desktop application itself.

- containerd. A core container runtime responsible for managing the entire lifecycle of containers on its host system, including pulling, pushing, and managing images. containerd was originally developed by Docker, Inc. as an integral component of Docker, but it was later decoupled and now stands as an independent project. Despite this separation, Docker continues to rely on containerd as its foundational runtime layer.

Apple's Virtualization Framework & Rosetta

Rosetta is a translation layer that allows apps built for Intel-based Mac computers to run on Apple silicon Macs. Apple's Virtualization Framework (AVF) is a set of APIs that allows developers to create and manage virtual machines (VMs) on Apple silicon and Intel-based Mac computers. On Apple silicon the two can be combined: a Linux VM created with AVF can use Rosetta to translate x64 Linux binaries. In the context of containerization, this is what allows x64 containers to run inside an Arm VM. Not all containerization solutions support Apple's approach. In fact, most rely on QEMU for virtualization, and support for Apple's framework arrived only relatively recently.

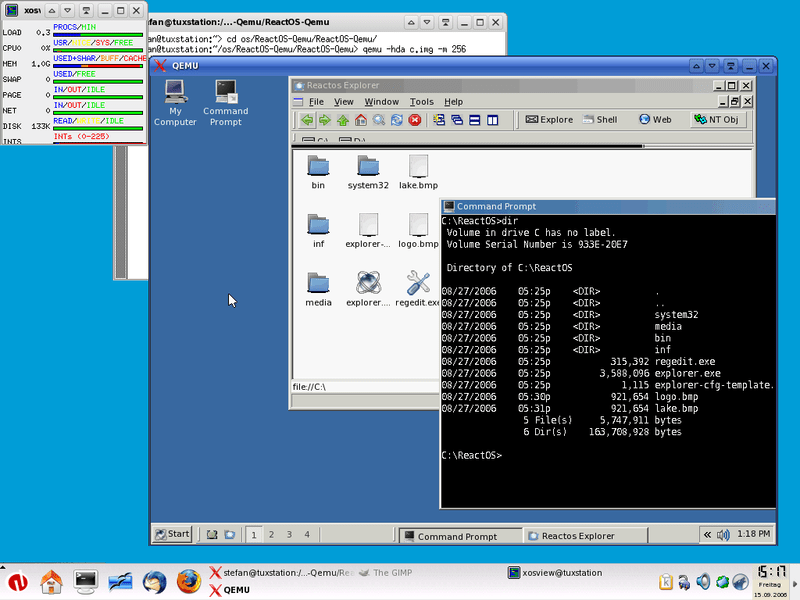

QEMU

QEMU is a generic and open source machine emulator and virtualizer. It can be used to run operating systems and applications on different hardware platforms. QEMU is often used by tools for containerization to create lightweight virtual machines that can be used to run containers.

QEMU can also run in user-mode emulation, where instead of booting a whole VM it executes individual binaries built for another architecture. Containerization tools commonly rely on this mode to run x64 container processes inside an Arm VM.

In the context of containerization, many tools use QEMU to create virtual machines that can run both Arm and x64 images side by side.
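Inside an Arm Linux VM, an x64 binary only runs if the kernel's binfmt_misc mechanism hands it to an emulator, such as QEMU's user-mode qemu-x86_64 or, with Apple's framework, Rosetta. Each registration is a file under /proc/sys/fs/binfmt_misc describing a byte pattern (magic and mask) and an interpreter. The Python sketch below parses such an entry and checks whether a given file header would be routed to it. The sample entry is modeled on a typical qemu-user registration for x86-64; its interpreter path is illustrative and differs between distributions and tools.

```python
from typing import Dict, Union

Entry = Dict[str, Union[bool, int, str, bytes]]


def parse_binfmt_entry(text: str) -> Entry:
    """Parse the contents of a /proc/sys/fs/binfmt_misc/<name> file."""
    entry: Entry = {"enabled": False, "offset": 0}
    for raw in text.splitlines():
        line = raw.strip()
        key, _, value = line.partition(" ")
        if line == "enabled":
            entry["enabled"] = True
        elif key == "interpreter":
            entry["interpreter"] = value
        elif key == "offset":
            entry["offset"] = int(value)
        elif key in ("magic", "mask"):
            entry[key] = bytes.fromhex(value)
    return entry


def handles(entry: Entry, header: bytes) -> bool:
    """True if the kernel would pass a file starting with `header` to this entry."""
    magic = entry.get("magic")
    if not entry["enabled"] or not isinstance(magic, bytes):
        return False
    mask = entry.get("mask", b"\xff" * len(magic))
    start = int(entry["offset"])
    window = header[start:start + len(magic)]
    if len(window) < len(magic):
        return False
    # A byte matches when it equals the magic byte in every bit the mask keeps.
    return all((b ^ m) & k == 0 for b, m, k in zip(window, magic, mask))


# Roughly what a qemu-user registration for x86-64 looks like. The interpreter
# path is illustrative and varies by distribution.
SAMPLE_X86_64_ENTRY = (
    "enabled\n"
    "interpreter /usr/bin/qemu-x86_64-static\n"
    "flags: F\n"
    "offset 0\n"
    "magic 7f454c46" "02010100" "00000000" "00000000" "02003e00" "\n"
    "mask ffffffff" "fffefe00" "ffffffff" "ffffffff" "feffffff" "\n"
)
```

The mask ignores the low bit of e_type, so both regular and position-independent x86-64 executables match, while an aarch64 header (e_machine 0xb7) does not. A VM without such a registration has nowhere to send x64 binaries, which is one common cause of "exec format error".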

Docker & x64 containers on Apple Silicon

Wait, doesn't that come out of the box?

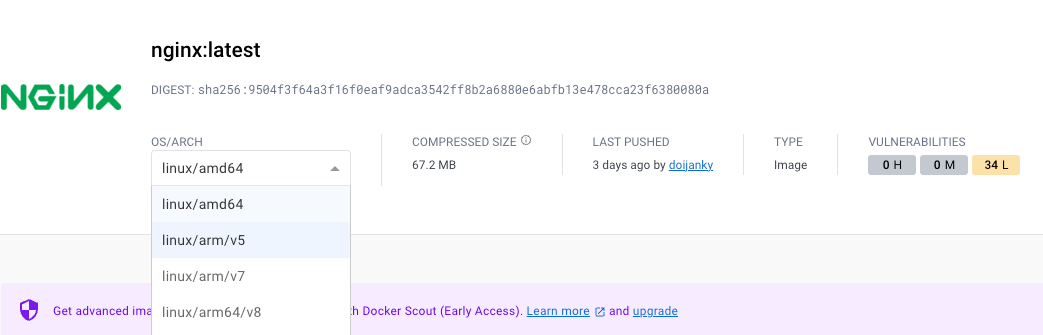

Firstly, it's essential to clarify that, strictly speaking, there aren't "multi-arch images". In the Docker ecosystem, however, images often mimic this behavior. When you build and push an image with Docker, it defaults to the architecture of your machine. Consequently, if your machine's architecture differs from that of the image, attempting to run the image will likely fail with errors such as "exec format error."
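The "exec format error" comes from the kernel refusing to execute a binary built for another CPU. Every Linux executable is an ELF file whose header records its target machine in the e_machine field, so you can check which architecture a binary targets without running it. A minimal Python sketch, covering only the handful of e_machine values relevant here:

```python
import struct

# A few ELF e_machine values (as defined in the ELF specification / <elf.h>).
EM_NAMES = {3: "386", 40: "arm", 62: "amd64", 183: "arm64"}


def elf_arch(header: bytes) -> str:
    """Return the Docker-style architecture name recorded in an ELF header."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # EI_DATA (byte 5): 1 = little-endian, 2 = big-endian.
    endian = "<" if header[5] == 1 else ">"
    # e_machine is a 16-bit field at offset 18 in both 32- and 64-bit ELF files.
    (machine,) = struct.unpack_from(endian + "H", header, 18)
    return EM_NAMES.get(machine, f"unknown({machine})")


def binary_arch(path: str) -> str:
    """Read the first 20 bytes of a file and report its ELF architecture."""
    with open(path, "rb") as f:
        return elf_arch(f.read(20))
```

Pointing binary_arch at the binary from a failing container (for example, a copy of /usr/sbin/nginx extracted from the image) should report "amd64" when an x64 image is run on an Arm host without emulation.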

Docker multi-arch images consist of multiple distinct images for the same application, each built for a specific architecture. These images are unified under a single manifest list (an OCI image index), which maps each image to the platform it targets. Docker has a good explanation of the concept of multi-architecture images at the following link: https://www.docker.com/blog/multi-arch-build-and-images-the-simple-way/

Recent Docker Desktop releases have integrated support for Apple's virtualization framework and Rosetta emulation. This lets Docker run both Arm and Intel images, with Intel images running under emulation (QEMU, or Rosetta when it is enabled). As one might expect, emulating Intel images introduces some performance overhead.

When executing docker pull in your terminal, Docker tries to retrieve an image that matches your machine's architecture. If no match is found, Docker falls back to an AMD64 image and relies on emulation (Rosetta, when enabled) to run it.
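The selection step can be sketched in Python against a trimmed-down image index. The index below is a made-up sample with placeholder digests (a real one can be viewed with docker manifest inspect on an image). The fallback is opt-in here because whether a client falls back, and to what, depends on the tool and its settings; passing a platform explicitly, as in docker pull --platform linux/amd64, is the unambiguous way to ask for an x64 image. For simplicity the sketch ignores the optional "variant" field.

```python
import json
from typing import Optional

# A trimmed-down image index (manifest list); digests are placeholders.
SAMPLE_INDEX = json.loads("""
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.index.v1+json",
  "manifests": [
    {"digest": "sha256:aaa", "platform": {"os": "linux", "architecture": "amd64"}},
    {"digest": "sha256:bbb",
     "platform": {"os": "linux", "architecture": "arm64", "variant": "v8"}}
  ]
}
""")


def select_manifest(index: dict, os_name: str, arch: str,
                    fallback_arch: Optional[str] = None) -> dict:
    """Pick the manifest entry for os_name/arch from an image index."""
    def find(wanted: str) -> Optional[dict]:
        for entry in index.get("manifests", []):
            platform = entry.get("platform", {})
            if platform.get("os") == os_name and platform.get("architecture") == wanted:
                return entry
        return None

    entry = find(arch)
    if entry is None and fallback_arch is not None:
        # Opt-in fallback, modelling the "use amd64 and emulate it" behaviour.
        entry = find(fallback_arch)
    if entry is None:
        raise LookupError(f"no manifest for {os_name}/{arch}")
    return entry
```

For an index that lists both platforms, an arm64 host gets the arm64 entry; for an amd64-only index it gets the amd64 entry only when the fallback is requested.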

Notably, numerous projects on Docker Hub offer "multi-arch images". As an illustration, the NGINX Docker page outlines supported architectures.

On Docker Desktop for macOS, this adaptability extends to Kubernetes. By enabling Kubernetes within Docker Desktop, you essentially instruct Kubernetes to operate within your Docker Runtime environment. This implies Kubernetes will utilize the same container runtime and virtual machine as Docker. Given that the container runtime also taps into Apple's Rosetta, Kubernetes inherits the ability to run x64 containers through emulation, leveraging functionalities from the Docker runtime.

Tools

Now that we've introduced several foundational concepts, let's explore tools that leverage these technologies. While this isn't an exhaustive list, it offers insights into alternatives to Docker for developing microservices, whether you're aiming to run individual containers or complete microservice stacks.

Plain Minikube

Minikube can also be used as a standalone component, and it supports several kinds of drivers. If we exclude Docker, the remaining viable options appear to be QEMU and HyperKit.

After installing it with brew install minikube, it turns out that HyperKit is not supported on my machine:

➜ ~ minikube start --driver=hyperkit

W0910 16:57:02.238664 4768 main.go:291] Unable to resolve the current Docker CLI context "default": context "default": context not found: open /Users/csotiriou/.docker/contexts/meta/37a8eec1ce19687d132fe29051dca629d164e2c4958ba141d5f4133a33f0688f/meta.json: no such file or directory

😄 minikube v1.31.2 on Darwin 13.5.1 (arm64)

✨ Using the hyperkit driver based on user configuration

❌ Exiting due to DRV_UNSUPPORTED_OS: The driver 'hyperkit' is not supported on darwin/arm64

When using QEMU with a strictly AMD64 container, I get the following error:

exec /usr/sbin/nginx: exec format error

Stream closed EOF for default/my-deployment-698dfbdfb7-wkcm2 (my-container)

This means that my Minikube installation using QEMU is not able to run x64 (amd64) containers at present. Plus, this approach doesn't give me plain docker and docker-compose without installing them separately, but I thought I'd give it a shot.

Minikube offers a podman driver which seems promising since Podman is able to emulate and use x64 images - but at the time of this writing, it's still in the experimental phase and I didn't have the chance to try it.

MicroK8s

I have to admit that the combination of Multipass and MicroK8s is a very enticing stack. Adding new VMs is a breeze, and setting up a new Kubernetes cluster is a matter of running one command.

On macOS, MicroK8s uses Multipass underneath. Multipass installs and runs a standard Ubuntu machine; MicroK8s is then installed in that guest machine, and the necessary configuration is performed to connect everything to the host machine.


While the toolset is undeniably robust, there's a caveat: I struggled to execute x64 images within MicroK8s, despite Multipass's substantial reliance on QEmu for macOS compatibility. Additionally, much like the plain Minikube solution, it falls short in integrating a seamless Docker instance on the host. Given these challenges, I opted to explore alternative solutions.

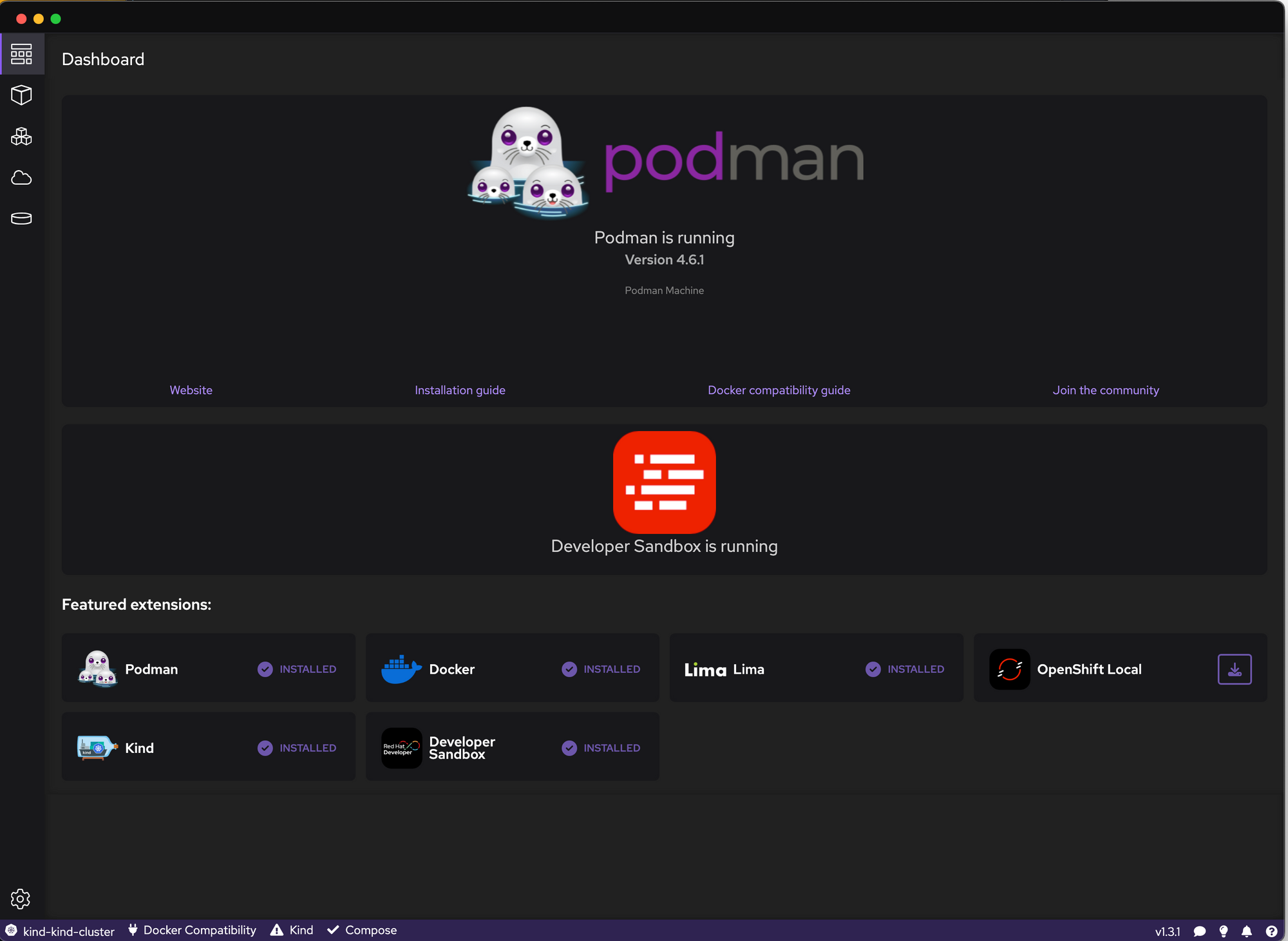

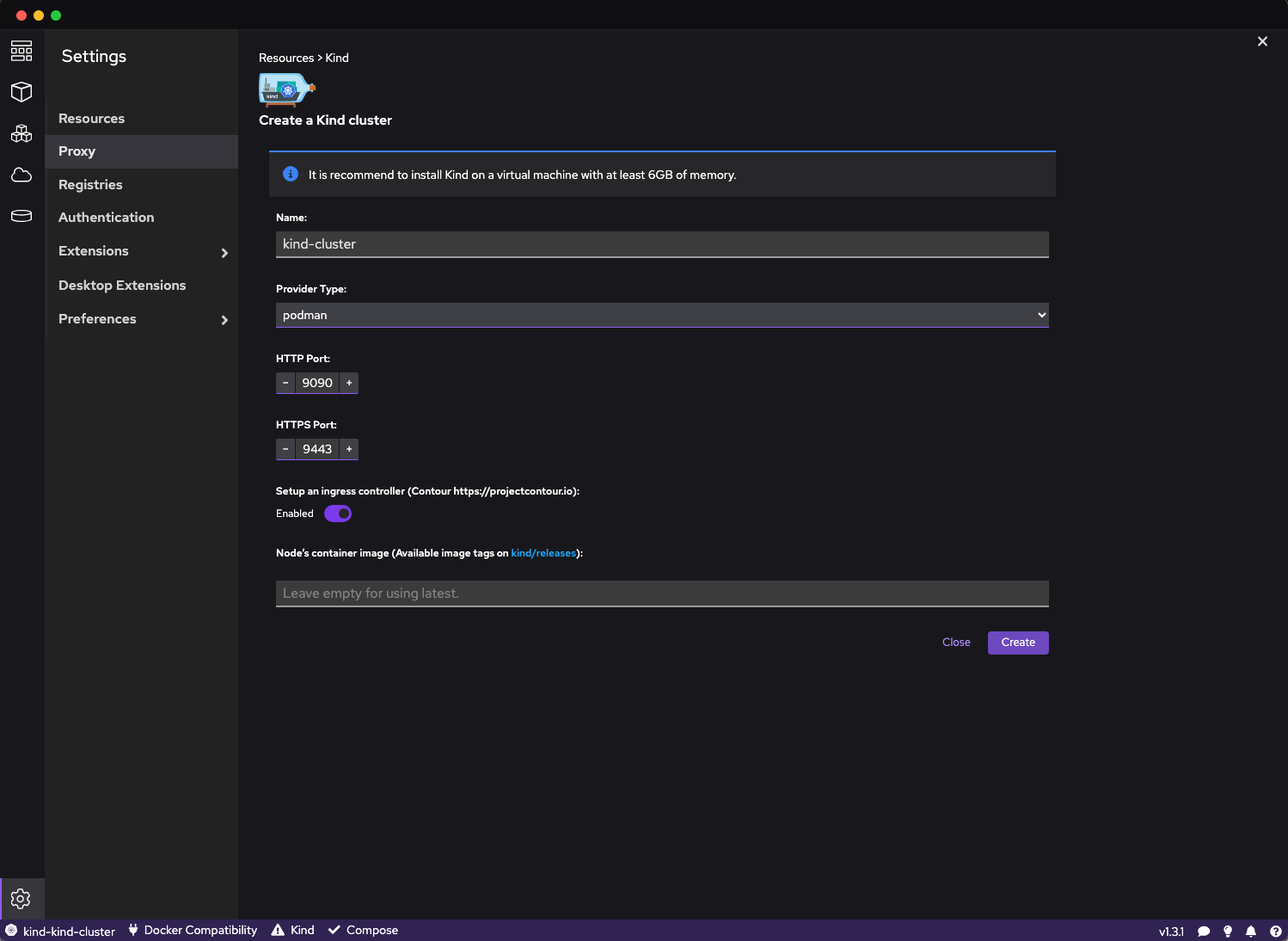

Podman + KIND

Podman is a Docker-compatible tool for orchestrating and managing containers. When Docker announced its licensing change some time ago, Podman was the first alternative I considered. However, it was still in its early days, and I faced numerous challenges, mostly related to folder mounting and networking.

Fast forward to now, Podman has significantly evolved. Having used it intensively over the past few months, I was pleasantly surprised by its functionality. Moreover, there's a Podman Desktop application that further simplifies the orchestration and deployment of both pods and individual containers.

Underneath, Podman on macOS runs its containers in a QEMU-based virtual machine; the Quarkus team has written an insightful tutorial on running Podman on Apple Silicon devices. This is beneficial, as QEMU specializes in emulating diverse architectures, which lets Podman support x64 images with little effort. While QEMU's x64 emulation might not match the speed of Apple's Rosetta 2 provided via the Virtualization Framework, it is more than enough for typical use cases.

Since Podman supports running both ARM and x64 containers, we can run KIND (Kubernetes in Docker) on top of it and take advantage of the same emulation inside Kubernetes.

For me, the combination of Podman and KIND has been a success, ticking all my requirements.

- It replicates the Docker commands I'm accustomed to.

- Enables Kubernetes operation on the same device without the need for an additional VM, optimizing RAM usage.

- Supports both ARM and x64 Containers, be it through the Docker (or Podman) command or within Kubernetes via KIND.

- As a bonus, Quarkus' Dev Services, which are built on Testcontainers, can automatically detect and use both Docker and Podman environments.
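Wiring these pieces together mostly comes down to environment variables. The Python sketch below assumes two things: that podman machine inspect prints a JSON array whose entries expose the socket at ConnectionInfo.PodmanSocket.Path (the field layout I have seen with Podman 4.x; check your version's output), and that KIND is switched to Podman with its KIND_EXPERIMENTAL_PROVIDER=podman variable. The helper names are hypothetical.

```python
import json
from typing import Dict, Mapping


def docker_host_from_podman_inspect(inspect_json: str) -> str:
    """Build a DOCKER_HOST value from `podman machine inspect` JSON output."""
    # The command prints a JSON array with one object per machine.
    machines = json.loads(inspect_json)
    path = machines[0]["ConnectionInfo"]["PodmanSocket"]["Path"]
    return f"unix://{path}"


def podman_dev_env(base: Mapping[str, str], docker_host: str) -> Dict[str, str]:
    """Environment for running kind and Quarkus Dev Services against Podman."""
    env = dict(base)
    # Docker-API clients such as Testcontainers look at DOCKER_HOST.
    env["DOCKER_HOST"] = docker_host
    # Tells kind to drive Podman instead of Docker.
    env["KIND_EXPERIMENTAL_PROVIDER"] = "podman"
    return env
```

In practice you would feed the real command output into docker_host_from_podman_inspect and pass the resulting environment to subprocess.run(["kind", "create", "cluster"], env=..., check=True), or simply export the two variables in your shell.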

Note: I rely heavily on Docker Compose. Although rumors suggest that Podman might not integrate seamlessly with docker-compose, my experience with Podman and docker-compose v2.x contradicts this. However, it's crucial to understand that while Podman is frequently touted as a direct Docker substitute, several nuances differentiate the two. For a more in-depth comparison, I recommend this article.

Limactl & Colima

Lima is a commendable virtual machine orchestrator.

Lima stands for Linux Machines, and it is an extremely versatile tool for setting up Linux machines on macOS (and Linux). It is frequently paired with nerdctl, a Docker-compatible CLI for containerd. Remember, Docker is built on top of containerd as well. Lima's versatility comes from the wide range of virtualization back ends and container engines it supports.

Of particular interest to me was the "Fast Mode 2, Rosetta" virtualization described here, which allowed me to set up a machine with k3s and containerd using Rosetta 2 emulation. The following is my setup YAML file:

```yaml
vmType: "vz"
rosetta:
  # Enable Rosetta for Linux.
  # Hint: try `softwareupdate --install-rosetta` if Lima gets stuck at `Installing rosetta...`
  enabled: true
  # Register rosetta to /proc/sys/fs/binfmt_misc
  binfmt: true

images:
# Try to use release-yyyyMMdd image if available. Note that release-yyyyMMdd will be removed after several months.
- location: "https://cloud-images.ubuntu.com/releases/22.04/release-20230729/ubuntu-22.04-server-cloudimg-amd64.img"
  arch: "x86_64"
  digest: "sha256:d5b419272e01cd69bfc15cbbbc5700d2196242478a54b9f19746da3a1269b7c8"
- location: "https://cloud-images.ubuntu.com/releases/22.04/release-20230729/ubuntu-22.04-server-cloudimg-arm64.img"
  arch: "aarch64"
  digest: "sha256:5ecab49ff44f8e44954752bc9ef4157584b7bdc9e24f06031e777f60860a9d17"
# Fallback to the latest release image.
# Hint: run `limactl prune` to invalidate the cache
- location: "https://cloud-images.ubuntu.com/releases/22.04/release/ubuntu-22.04-server-cloudimg-amd64.img"
  arch: "x86_64"
- location: "https://cloud-images.ubuntu.com/releases/22.04/release/ubuntu-22.04-server-cloudimg-arm64.img"
  arch: "aarch64"

mounts: []

containerd:
  system: false
  user: false

provision:
- mode: system
  script: |
    #!/bin/sh
    if [ ! -d /var/lib/rancher/k3s ]; then
      curl -sfL https://get.k3s.io | sh -s - --disable=traefik
    fi

probes:
- script: |
    #!/bin/bash
    set -eux -o pipefail
    if ! timeout 30s bash -c "until test -f /etc/rancher/k3s/k3s.yaml; do sleep 3; done"; then
      echo >&2 "k3s is not running yet"
      exit 1
    fi
  hint: |
    The k3s kubeconfig file has not yet been created.
    Run "limactl shell k3s sudo journalctl -u k3s" to check the log.
    If that is still empty, check the bottom of the log at "/var/log/cloud-init-output.log".

copyToHost:
- guest: "/etc/rancher/k3s/k3s.yaml"
  host: "{{.Dir}}/copied-from-guest/kubeconfig.yaml"

message: |
  To run `kubectl` on the host (assumes kubectl is installed), run the following commands:
  ------
  export KUBECONFIG="{{.Dir}}/copied-from-guest/kubeconfig.yaml"
  kubectl ...
  ------
```
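The images list in this configuration is an ordered list of candidates. My understanding of Lima's behavior (an assumption; check the Lima documentation for your version) is that it skips entries whose arch differs from the VM's architecture and uses the first remaining image that downloads successfully, which is why the dated release is listed before the undated fallback. The Python sketch below models that with a caller-supplied download function and does not call Lima. Note that with vmType "vz" on Apple Silicon the VM itself is aarch64, so only the arm64 images are candidates; x64 support comes from Rosetta, not from the amd64 image.

```python
from typing import Callable, Optional, Sequence

BASE = "https://cloud-images.ubuntu.com/releases/22.04/"

# The images list from the YAML configuration (digests omitted).
IMAGES = [
    {"location": BASE + "release-20230729/ubuntu-22.04-server-cloudimg-amd64.img", "arch": "x86_64"},
    {"location": BASE + "release-20230729/ubuntu-22.04-server-cloudimg-arm64.img", "arch": "aarch64"},
    {"location": BASE + "release/ubuntu-22.04-server-cloudimg-amd64.img", "arch": "x86_64"},
    {"location": BASE + "release/ubuntu-22.04-server-cloudimg-arm64.img", "arch": "aarch64"},
]


def pick_image(images: Sequence[dict], vm_arch: str,
               download: Callable[[str], bool]) -> Optional[dict]:
    """Return the first image for vm_arch whose download succeeds, else None."""
    for image in images:
        if image["arch"] != vm_arch:
            continue  # entries for other architectures are skipped
        if download(image["location"]):
            return image
        # Download failed (e.g. the dated release was removed): try the next one.
    return None
```

Simulating a removed dated release, for example with download=lambda url: "release-20230729" not in url, makes the aarch64 selection land on the undated arm64 image.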


With Lima, I successfully set up Kubernetes to run both ARM and x64 containers without a hitch. However, Lima and nerdctl operate at a somewhat lower level. While they perform admirably, certain tools I use, such as Quarkus (especially its Dev Services), don't integrate with them out of the box, since they don't know where to find the Docker socket and the other pieces needed to establish a complete Docker environment on the host. As a result, more manual work is needed to set everything up if one wants to stay strictly with Lima.

This is where Colima comes in.

Colima is essentially a refined layer built atop Lima, serving as an enhanced wrapper. While it retains the versatility of Lima, Colima further simplifies the process of setting up Linux container machines and emphasizes deeper integration with the macOS system.

For instance, the command I employ to initiate a new Colima installation is:

colima start --kubernetes --arch aarch64 --vm-type=vz --vz-rosetta

Executing this command will install the following:

- A virtual machine with docker and containerd inside. The VM is managed by Lima and is based on a very thin Alpine image.

- A Kubernetes (k3s) cluster that uses that docker runtime.

- This instance is able to run x64 images.

- It uses Rosetta instead of QEMU for x64 emulation, which makes emulated workloads much faster.

- A socket mounted on the host at ~/.colima/default/docker.sock, so that it can be used by other Docker-compatible clients.

If you want to use this particular host with the docker CLI, you can run docker context use <<colima context name>> on your host machine to switch between Docker-compatible engines.
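If you want the socket address of a context programmatically, for example to derive DOCKER_HOST from it, docker context inspect prints the context as JSON, with the endpoint under Endpoints.docker.Host. A small Python sketch; the function names are hypothetical, and the context name is whatever docker context list shows for your Colima instance:

```python
import json
import subprocess


def context_host(inspect_json: str) -> str:
    """Return the endpoint of the first context in `docker context inspect` output."""
    # The command prints a JSON array with one object per inspected context.
    contexts = json.loads(inspect_json)
    return contexts[0]["Endpoints"]["docker"]["Host"]


def inspect_context(name: str) -> str:
    """Run `docker context inspect NAME` and return its raw JSON output."""
    result = subprocess.run(
        ["docker", "context", "inspect", name],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Calling context_host(inspect_context(name)) should return a unix:// address that can be used directly as DOCKER_HOST.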

If you use Quarkus dev services like me, you can set the DOCKER_HOST environment variable when running Quarkus Dev Services.

For example:

export DOCKER_HOST="unix://${HOME}/.colima/default/docker.sock" mvn compile quarkus devYou can put the following line in your .bashrc or .zshrc so that the Colima docker socket is always used

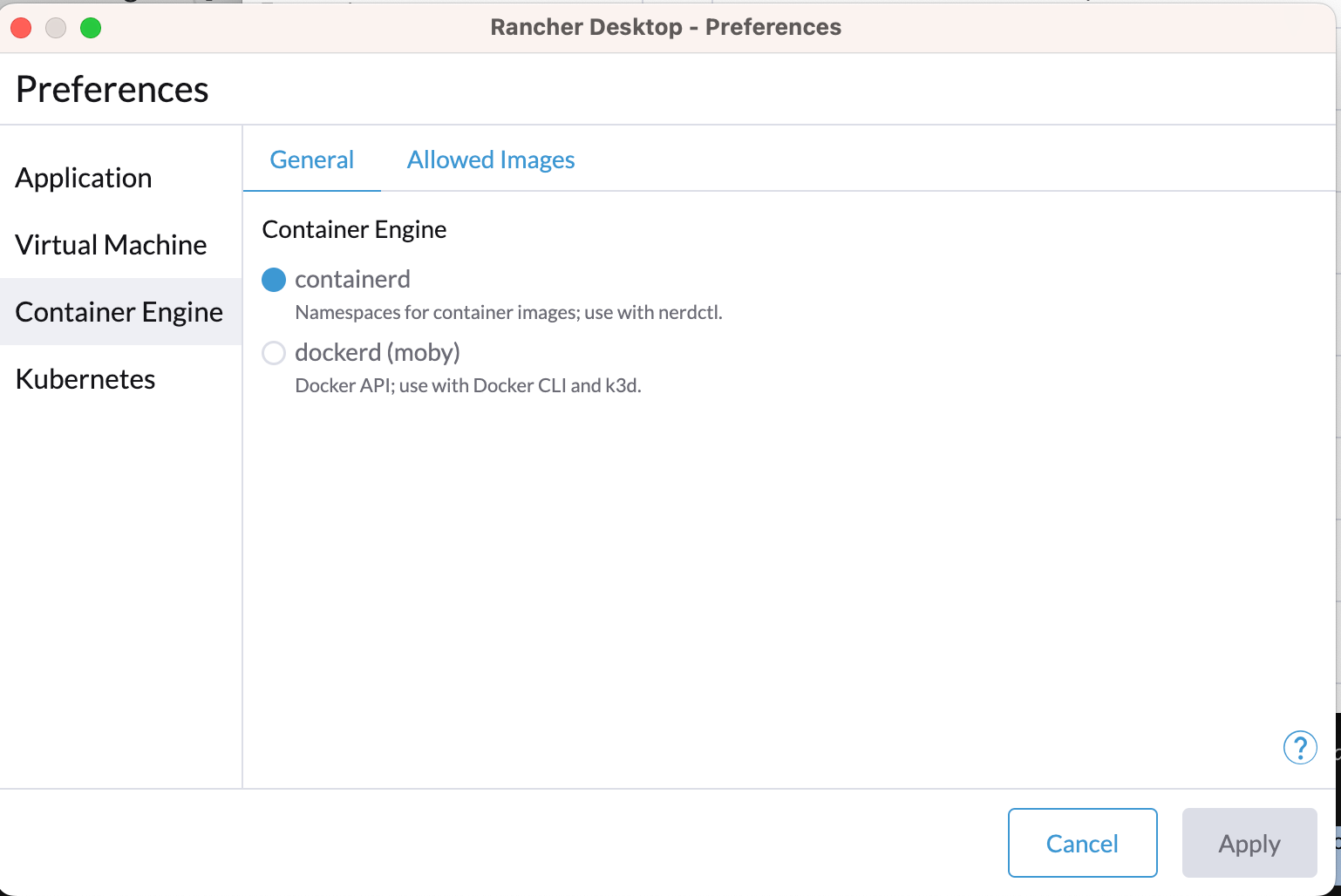

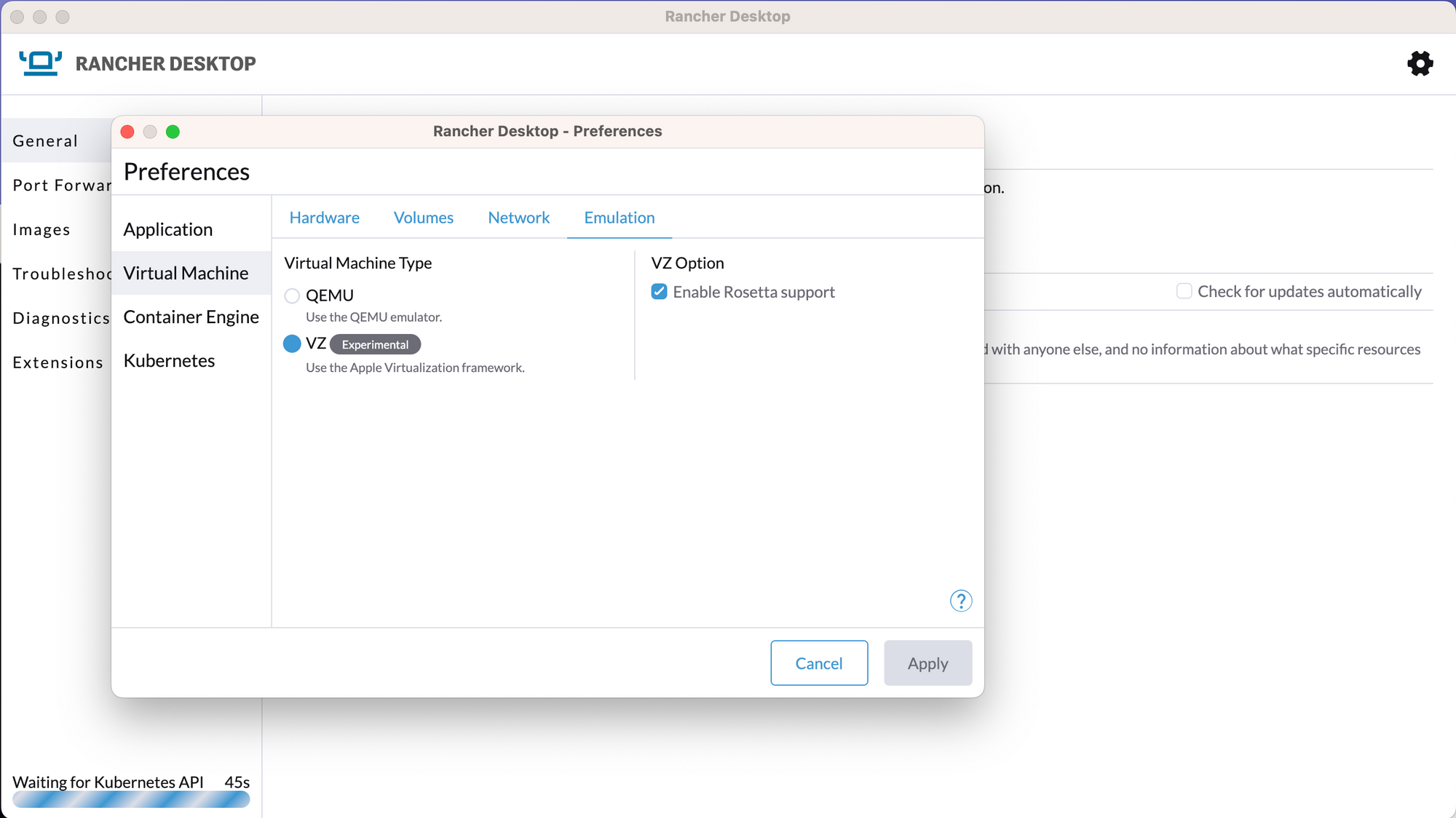

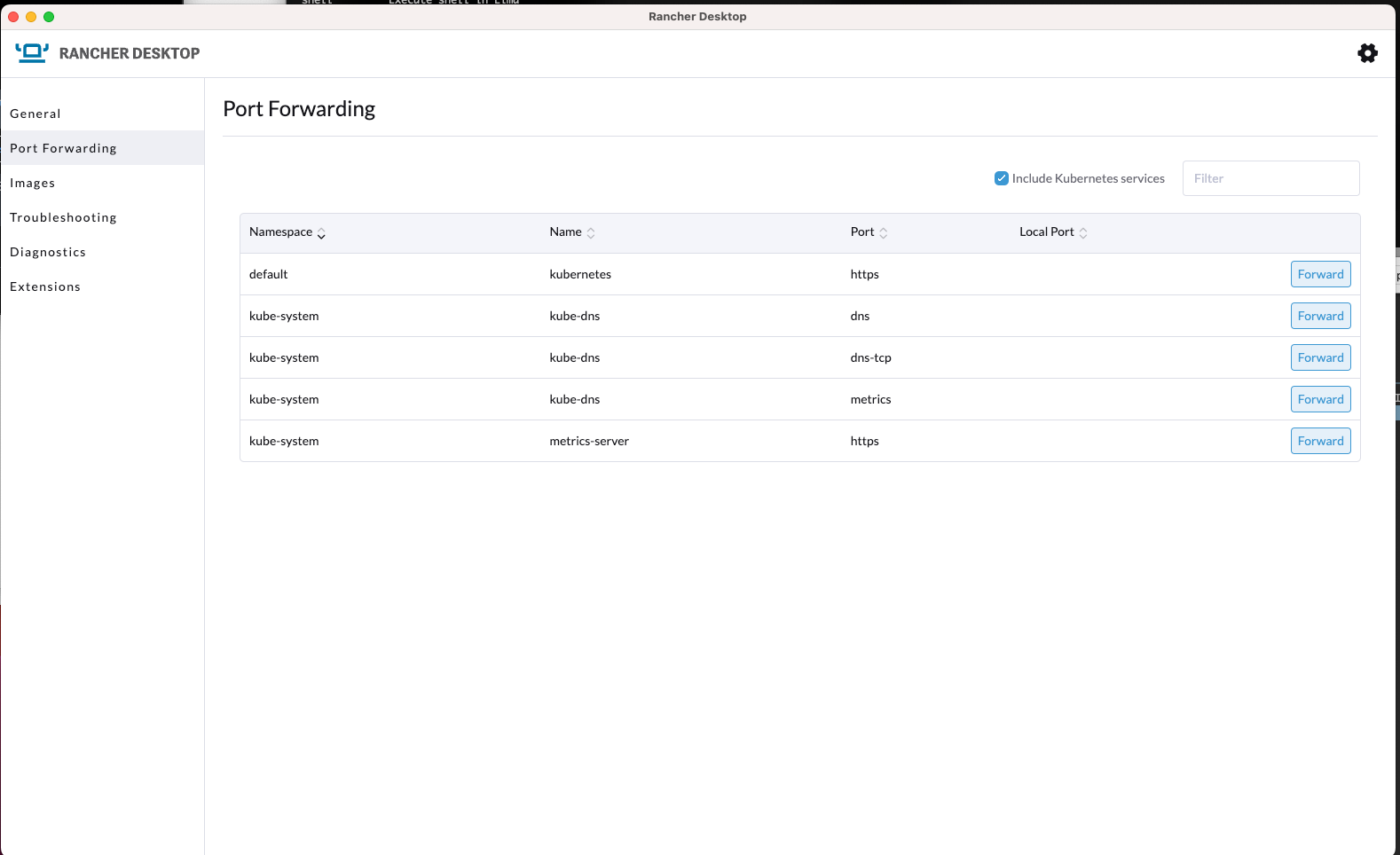

export DOCKER_HOST="unix://${HOME}/.colima/default/docker.sock"Rancher Desktop

Rancher Desktop operates with Lima at its core. On its first launch, it creates a directory at ~/.rd/bin containing aliases to the Docker executables, which point to the binaries bundled inside the app:

Rancher Desktop.app/Contents/Resources/resources/darwin/bin

The suite of command-line tools provided includes rdctl (to interface with Rancher Desktop) and familiar utilities like docker, docker-compose, nerdctl, and docker-buildx.

Interestingly, even if you relocate "Rancher Desktop.app" after the first launch, the application keeps everything working by updating these paths each time it starts.
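To see where each of those aliases actually leads, you can resolve them. The Python sketch below assumes the entries in ~/.rd/bin are symbolic links (which is how I understand these aliases to work; if they are not, realpath simply returns the entry itself), and the helper name is hypothetical.

```python
import os
from pathlib import Path
from typing import Dict


def resolve_tools(bin_dir: Path) -> Dict[str, Path]:
    """Map each entry in bin_dir to the real file it ultimately points to."""
    # os.path.realpath follows every symlink in the chain.
    return {entry.name: Path(os.path.realpath(entry)) for entry in sorted(bin_dir.iterdir())}


if __name__ == "__main__":
    rd_bin = Path.home() / ".rd" / "bin"
    if rd_bin.is_dir():
        for name, target in resolve_tools(rd_bin).items():
            print(f"{name} -> {target}")
```

After moving the app, running this again is a quick way to confirm that the links were updated to the new location.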

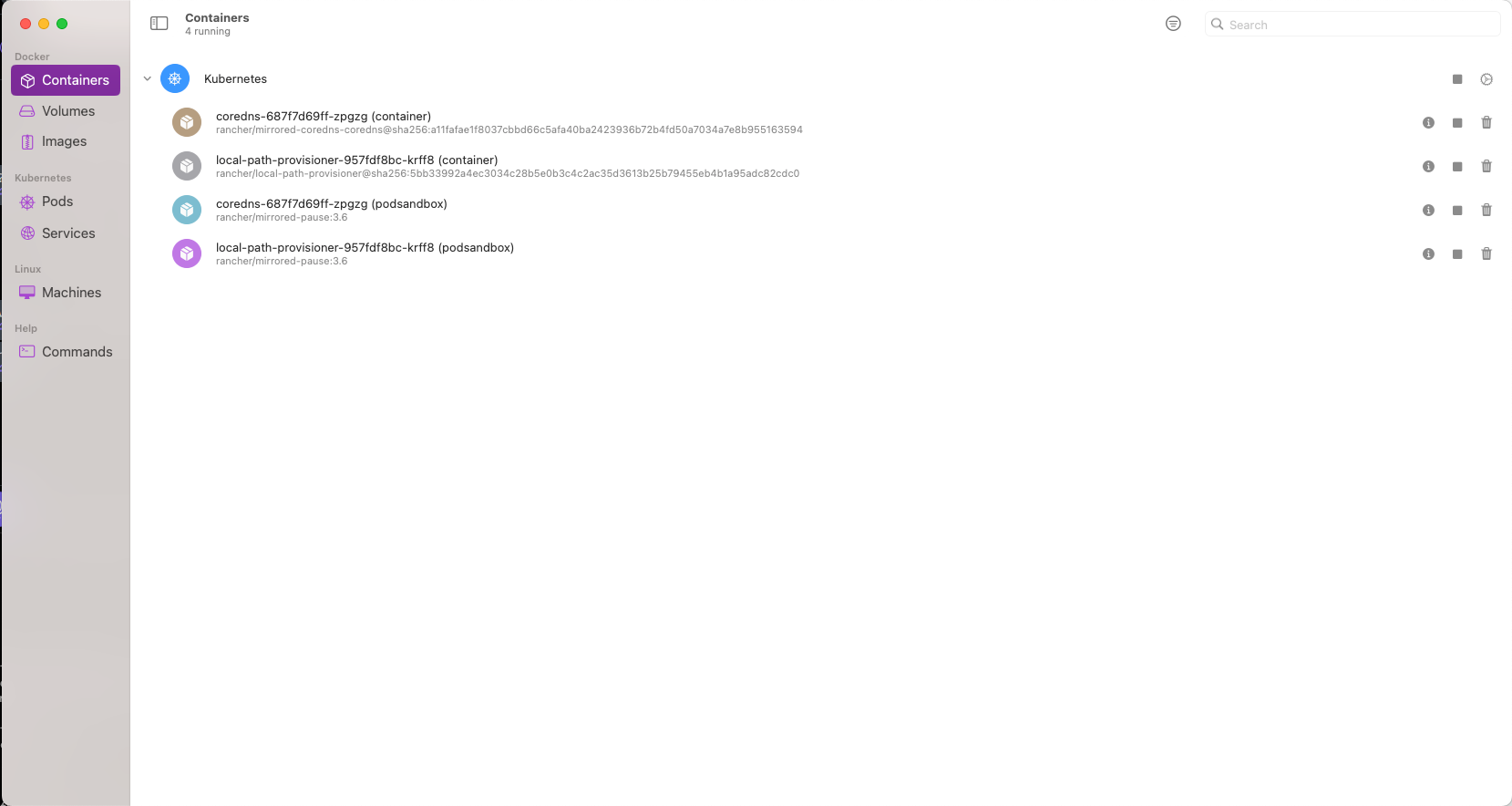

Upon running Rancher Desktop, the following steps are performed:

- A Linux VM is created.

- The Docker CLI is installed inside the VM.

- A version of K3s is installed inside the guest OS.

- Your ~/.kube/config file is updated with the rancher-desktop context, allowing you to interact with the embedded K3s.

- Depending on your configuration, one of the following happens:

  - If Docker is set as your container engine in the Rancher Desktop settings, the Docker daemon in the guest machine runs, and its socket is mounted in your host OS. K3s is started with the --docker option, so Kubernetes runs on that same Docker daemon.

  - If containerd is set as your container engine in the Rancher Desktop settings, the Docker daemon is not installed, and K3s boots using only the containerd runtime.

Rancher Desktop also supports the Apple Virtualization Framework with Rosetta, which enables faster x64 emulation. QEMU also works fine, and x64 images can be used with either option.

Being built on Lima, Rancher Desktop enjoys several inherent benefits:

- Experimental support for Rosetta emulation on macOS ARM. In my experience, it has performed reliably.

- The macOS file system is integrated seamlessly into the virtual machine, so host folders feel like a native part of the VM's file system.

- Images appear to be shared between the host and the guest, as if they came from a single registry.

- Installation is straightforward – a mere drag-and-drop suffices.

- Very good customization options regarding container engines, emulation options, etc.

Rancher Desktop, built entirely on open-source components such as Moby, containerd, and K3s, offers an excellent solution for anyone wanting to create a local Kubernetes environment for development purposes. I've relied on K3s in production because of its reliability. What sets Rancher Desktop apart is its smooth integration of K3s, which combines a comprehensive Kubernetes system with low resource consumption while still performing well. The added advantage? K3s's popularity makes community help easier to find than for alternative solutions.

While K3s can be installed with various products, Rancher Desktop offers it in a streamlined, out-of-the-box package, ensuring you have everything you need right from the start.

Using Rancher Desktop with Quarkus Dev Services involves exposing the mounted socket via the DOCKER_HOST variable, as with the other options discussed here.

export DOCKER_HOST=unix:///Users/<<username>>/.rd/docker.sock

Use docker context list to find out where the actual socket is mounted, so that you can adapt your calls accordingly.
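If you switch between tools, a small helper can pick whichever socket is present instead of hard-coding one. The Python sketch below only knows the two locations mentioned in this article (Colima's default profile and Rancher Desktop); the preference order is an arbitrary choice of mine, and other tools mount their sockets elsewhere, so check docker context list for those.

```python
from pathlib import Path
from typing import Callable, Iterable, Optional

# Socket locations mentioned in this article, relative to the home directory.
CANDIDATE_SOCKETS = (
    ".colima/default/docker.sock",  # Colima (default profile)
    ".rd/docker.sock",              # Rancher Desktop
)


def find_docker_host(home: Path,
                     candidates: Iterable[str] = CANDIDATE_SOCKETS,
                     exists: Callable[[Path], bool] = Path.exists) -> Optional[str]:
    """Return a unix:// DOCKER_HOST value for the first candidate that exists."""
    for relative in candidates:
        path = home / relative
        if exists(path):
            return f"unix://{path}"
    return None
```

The result of find_docker_host(Path.home()) can then be exported as DOCKER_HOST before running mvn compile quarkus:dev.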

I found Rancher Desktop to be the solution that covers all my requirements while being very simple to operate. It is currently my go-to solution.

Bonus, Mac-Only solution: OrbStack

OrbStack is fairly new in the container management arena and stands apart from its counterparts. Its capabilities are expanding, and it is currently comparable to Rancher Desktop (although not yet as feature-rich when it comes to low-level functionality and the versatility that limactl offers). To put its competencies in perspective, I'll draw parallels with Rancher Desktop, not only because of the robust tools Rancher Desktop employs but also because their feature sets are similar from a microservices developer's point of view (Docker & Kubernetes). Notably, OrbStack has recently added support for running Kubernetes within its virtual machine.

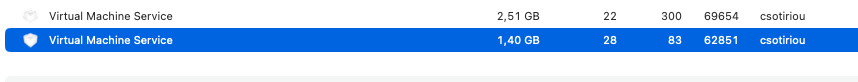

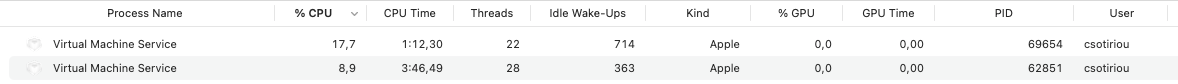

A remarkable attribute of OrbStack is its minimal resource footprint. In my experiments with creating Kubernetes deployments, it has proven to be efficient and reliable. The platform manages Docker and Kubernetes containers within the same virtual machine stack.

One aspect that distinguishes OrbStack from its competitors is its close integration with macOS, which makes it remarkably lightweight. For my needs, it's as feature-rich as Rancher Desktop, and it also stands out in terms of performance and efficiency.

On my machine, booting OrbStack with Kubernetes takes a mere 2 seconds. Both CPU and RAM usage are minimal. OrbStack starts with a conservative allocation of resources and then dynamically allocates more RAM based on the workload. Interestingly, my experience with Lima mirrors this behavior, but there are subtle differences worth noting.

It appears that, in a basic Kubernetes setup without any applications running, OrbStack consistently consumes fewer resources than its counterparts.

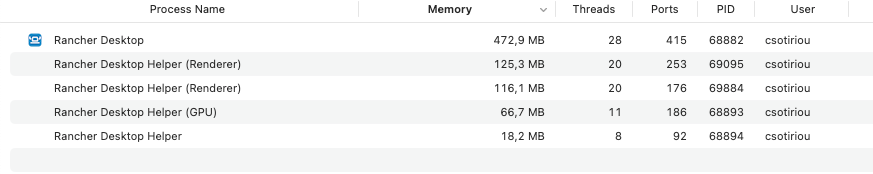

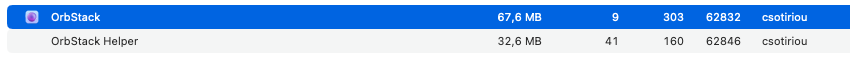

My other observation concerns UI performance compared with Rancher Desktop. Rancher Desktop appears to be built with Electron or a similar framework. The application takes up roughly 800 MB on my machine, compared with about 100 MB for OrbStack, and the gap in RAM consumption is even larger. As a result, navigating menus in OrbStack feels swift and seamless, unlike the somewhat sluggish experience in Rancher Desktop.

OrbStack mounts the Docker socket on the host just as Rancher Desktop does, and you can use docker context list to find out where it's mounted for use with your other development stacks.

At the time of this writing, I identified three potential drawbacks of OrbStack when compared with Rancher Desktop:

- Pricing model: OrbStack is set to become a paid product for commercial use. EDIT: Pricing here: https://orbstack.dev/pricing. In contrast, Rancher Desktop remains committed to being free. While this pricing decision might seem like a barrier, it's worth weighing it against the product's value. OrbStack's asking price seems justified, considering its unparalleled macOS integration and the corresponding development effort.

- No usable Linux machine by default: Another difference is that there is no underlying Linux machine you can use when simply running Docker and Kubernetes on OrbStack. Although you can install and run a Linux machine via OrbStack (still with minimal resource consumption), it operates as a distinct VM, separate from the primary Docker / Kubernetes environment. As a result, direct access to the Docker CLI is missing there unless you install it within that virtual machine. While this didn't pose an issue for me, it's something potential users might want to consider.

- Customization capabilities: OrbStack seems somewhat restrictive in terms of customization compared with Rancher Desktop, especially given the foundational tools the latter employs. With Lima and Rancher Desktop, users have a wider array of choices, from the virtualization type of the VM to the container engine. OrbStack appears to make these choices for you, simplifying the setup at the expense of customization. Therefore, for those seeking granular control over their Kubernetes installation, wanting to test applications across diverse container engines, or aiming for a fully flexible Docker and Kubernetes setup to test many different versions of their tools and infrastructure, OrbStack might not hit the mark.

In sum, while OrbStack presents several advantages, these potential limitations deserve consideration when making an informed choice. For the majority of macOS users who want a simple and seamless K8s and Docker setup, OrbStack's price is likely to pay off quickly. For more customization options, check out the other tools discussed here.

Conclusion

This piece has expanded considerably beyond my initial intention. It's astounding to consider that, despite its length, I've merely scratched the surface of the tools discussed. Each method highlighted comes with its unique advantages and limitations, all dependent on individual requirements.

That said, it's truly gratifying to see the significant strides made since the debut of Apple Silicon. Today, we have access to an array of robust development options, and the ecosystem for microservices development has reached a point where it is both mature and efficient.

Additional Reading

- QEMU: https://www.qemu.org

- containerd: the most popular container runtime

- Docker vs containerd: Container Runtimes Compared: a very good article explaining both concepts

- Apple's Virtualization Framework