From Hype to Reality: What AI Means for the Future of Software Engineering

GenAI enters the scene...

In December 2022, ChatGPT burst onto the scene, sparking curiosity and concern. Articles speculated on how GenAI could disrupt roles once thought uniquely human: writing, journalism, even software engineering. As a software engineer, I took this personally, almost as if my relevance were being questioned.

Fast forward to early 2024. I was reviewing a pull request for a key microservice update with a developer—let’s call him Jake. While going over his code, Jake casually mentioned, “I use Copilot and ChatGPT. You?” I admitted I used them too—great tools for speeding up routine tasks or learning new things. Then he joked, “Won’t be long before GenAI takes over and we’re out of jobs.”

But as we delved deeper into the system, something interesting happened. We realized we could skip a heavy chunk of the logic entirely by rethinking the upstream design. After a couple of hours, we had a new plan that cut unnecessary I/O by 15% and drastically simplified the system. When the changes went live, the results blew us away—50% less resource consumption, fewer replicas, and a happier database team.

This moment reinforced something important—GenAI, for all its power, cannot replace human ingenuity and problem-solving.

Fears of software developers becoming obsolete are not entirely without merit, and it's true that the software landscape is being transformed at a very rapid pace. However, I believe the real concern is not AI itself but the professional world's reaction to it. In this article, I'll explore how GenAI works, its impact on software engineering, and why critical thinking and creativity remain irreplaceable.

To understand why GenAI (and AI in general) cannot replace human ingenuity, it's essential to know how GenAI models work and where their limitations lie. Since the most recent disruption came with GenAI, I will use it as a test case, but my conclusions apply to AI in general.

Let's dive into how GenAI models function.

Textual GenAI: A Simple Idea Turned Up to 11

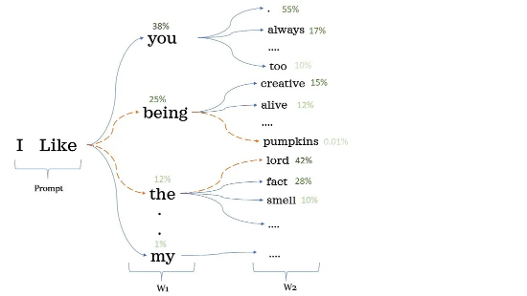

Generative AI is powered by LLMs (Large Language Models), which use deep learning techniques like transformer-based neural networks. At their core, these models predict the next word or token in a sequence based on context.

The model uses vast datasets and optimization techniques to learn patterns in language—grammar, semantics, and even basic facts about the world. When generating text, LLMs process input by converting words into numerical representations and applying attention mechanisms to determine relevance.
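To make "attention" a little less abstract, here is a minimal sketch of scaled dot-product attention for a single query, in plain Python. The two-dimensional embeddings and the three tokens are made up for illustration; real models learn vectors with hundreds or thousands of dimensions and also apply learned projections that are left out here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to how relevant each token is.
    dim = len(values[0])
    blended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, blended

# Hypothetical 2-dimensional embeddings for three tokens (illustration only).
embeddings = {
    "database": [1.0, 0.0],
    "replica": [0.9, 0.1],
    "banana": [0.0, 1.0],
}
tokens = list(embeddings)
vectors = [embeddings[t] for t in tokens]

# How much should "database" attend to each token, itself included?
weights, blended = attend(embeddings["database"], vectors, vectors)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

With these toy vectors, "replica" receives more weight than "banana" simply because its vector points in a similar direction to "database". Relevance here is geometry, not comprehension.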

At its core, an LLM is built on a simple idea: predict the next word (or token) based on the words that came before it. This basic concept is amplified "to 11" by scaling it up with massive datasets, billions (or even trillions) of parameters, and advanced architectures like transformers.

Therefore, LLMs are statistical tools. They excel at predicting patterns but lack true understanding or reasoning. In software engineering, they assist by generating code snippets based on examples, but their output requires human oversight for accuracy and integration.
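The "predict the next word" idea can be shown with a deliberately tiny stand-in: a bigram model that only counts which word follows which. This is not how an LLM is implemented (there is no neural network here), and the training sentence is invented, but it captures the statistical flavor of the approach.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Invented training text, for illustration only.
corpus = (
    "the service reads the database "
    "the service writes the cache "
    "the service reads the queue"
)
model = train_bigram(corpus)
print(predict_next(model, "service"))     # reads ("reads" seen twice, "writes" once)
print(predict_next(model, "kubernetes"))  # None: never seen in training
```

The model answers "reads" because that is the most frequent continuation in its data, and it has nothing to say about a word it never saw. An LLM is vastly more capable, but its output is still a prediction drawn from learned patterns.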

In essence, GenAI doesn’t reason; it mimics reasoning. This distinction between GenAI (and AI in general) and humans is crucial to understanding why many fears about AI tools are misplaced. Let's examine some implications of this misunderstanding and how society has responded to the introduction of GenAI.

Looking at GenAI from the wrong angle

The nature of GenAI described above still seems to elude many people. Many professionals believe that AI will replace human work across the board. They are not confined to software engineering; they include CEOs, HR leaders, and tech investors, all exploring how AI fits into their workflows.

However, we are looking at this from the wrong angle. The evidence is the mass workplace hysteria of recent years, fueled in part by the workplace changes that COVID-19 forced on us. Since 2022, many rushed and poorly thought-out corporate decisions have been made, driven by fear of missing out, corporate greed, and a lack of understanding, not just of GenAI and AI but also of the true capabilities of the human mind (a topic I plan to explore in a future blog post).

These rushed decisions are examples of the pitfalls of adopting new technologies without prior careful consideration.

(Too) Early GenAI adoption

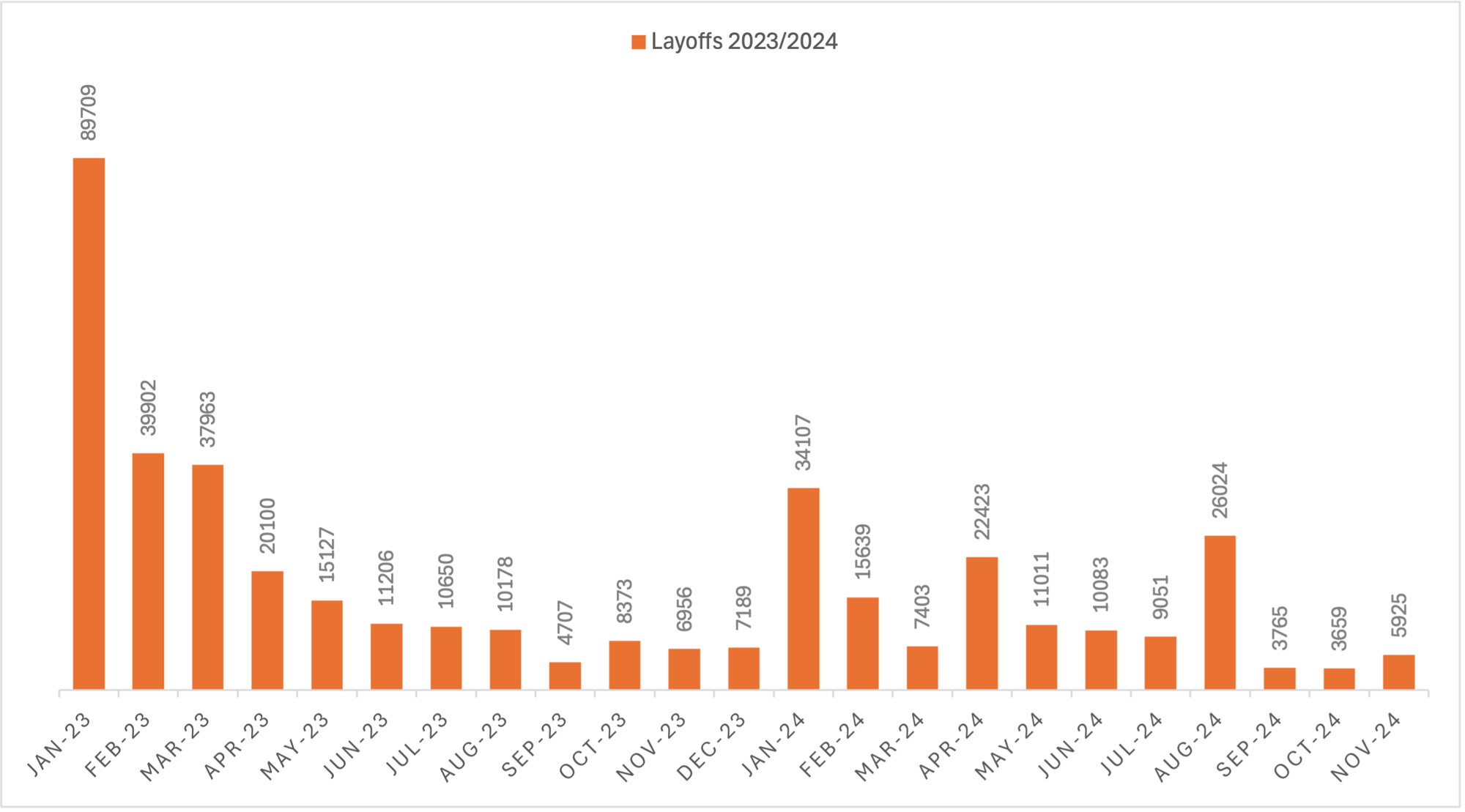

Consider a pivotal moment in this mass corporate hysteria: OpenAI announced ChatGPT, and less than two months later Google announced the layoff of 12,000 employees while shifting its focus to AI.

Many companies followed suit, laying off employees they believed unsuitable for an AI-driven world while hiring AI specialists. As of April 2024, strategies appear to be shifting as businesses realize that adopting AI demands a significant paradigm shift and that the process is far from straightforward. The infrastructure required is massive, the energy consumption is substantial, and most people attempting to use AI effectively need retraining. Some companies are now rehiring their former staff after realizing they jumped on the AI bandwagon prematurely.

In short, the hype has cooled. Companies that control AI tech have emerged as clear winners, but for others, the returns on investment are questionable when factoring in the costs—energy, implementation, and the sheer effort to clean and prep data for AI platforms. The corporate world now faces disillusionment, moving beyond the Peak of Inflated Expectations toward more pragmatic approaches. (Gartner: The Hype Cycle of AI)

Whether the hype was justified or not, articles linking AI to mass layoffs and shifting software priorities are widespread, often placing the blame on AI for the layoffs that began in early 2023. However, this narrative overlooks a major factor: the industry's mismanagement of the new realities brought on by COVID-19.

When AI meets COVID (workplace edition)

A significant portion of recent tech layoffs stemmed from over-hiring during the COVID-19 pandemic rather than solely from the rise of generative AI. The pandemic's acceleration of digitized services led businesses to hire aggressively in areas like e-commerce and remote work technologies, anticipating sustained growth. However, as demand normalized and economic pressures such as inflation and rising interest rates increased, companies were forced to reassess and cut costs, resulting in layoffs.

Looking at trends from the past five years is valuable, but going further back to historical pivot points offers more insights into how software development has evolved over the decades. GenAI has indeed sparked valid speculation about the future of software and fears of developers becoming obsolete. However, history shows that similar concerns arose with other technologies, only to result in new jobs and opportunities in the software sector. Let’s explore how these patterns repeat with each technological leap.

History repeats itself (?)

Software engineering has been around for decades, and its evolution has sparked fears of obsolescence many times. More than once it was thought to be a dying art that a select few would practice for the fun of it, unable to land a job with a salary that pays the bills. Let's take a look back.

- High-Level Programming Languages (1950s-1960s): When high-level programming languages like FORTRAN and COBOL came into play, they were expected to eliminate low-level coding.

- Later, object-oriented programming enabled the creation of reusable code libraries, which were thought to eliminate the need for critical thinking, custom software development, and a low-level understanding of software infrastructure. We now know that this is certainly not the case. If anything, critical thinking is required to adapt those libraries.

- In the 90s, Rapid Application Development tools were expected to automate coding, diminishing the role of software engineers. That didn't turn out to be true either. While some things were automated, the need to integrate all those automated pieces is far greater today than before.

- In the 2010s, the rise of No-Code / Low-Code platforms made headlines, creating worries about the future of software engineers. However, those platforms created an even bigger need for software engineers to combine platforms and take advantage of their APIs so that they could automate many parts of our lives.

- And now, we have AI.

The pattern is clear: with every technological advancement, the role of the engineer evolves but remains indispensable. Past performance does not guarantee future results, but if history has taught us anything so far, it is that software engineering, as a creative profession, remains very adaptable to changes in the technological field.

Takeaway

Over the last five years, corporations have made hasty decisions globally, driven partly by the workplace transformations caused by COVID-19. This includes over-hiring during the pandemic and over-firing in the push for AI-driven strategies. While GenAI is designed to assist, not replace, its misuse—fueled by greed, ignorance, and poor understanding of human potential—has led to layoffs and short-sighted decisions. The real issue isn’t GenAI but decision-makers relying on trends without fully understanding the technology.

Throughout history, decision-makers and trendsetters have made bold claims about technology's impact—sometimes with good reason. Yet, creative professions, including software development, have consistently adapted and thrived. Even in schools, one of the first lessons taught is the importance of adaptability. This mindset has enabled humans to elevate their craft, leveraging tools and programming languages that past generations could only dream about.

This pattern reflects a broader challenge: technology alone cannot solve systemic issues without thoughtful implementation and human oversight. As we explore what AI can and cannot do, it becomes clear that the true strength of technology lies in how it complements, rather than replaces, human ingenuity.

What an AI (still) cannot do

Software engineering is one of the most complex disciplines in today's world, and critical thinking is fundamental to its practice. Here's what an AI cannot do:

- Understand and refine business logic: AI cannot work with stakeholders (e.g., customers, managers, or corporations) to refine vague ideas into something concrete and feasible. This requires human communication and judgment.

- Develop technical strategies: While AI can assist with snippets of code, it cannot create a holistic technical plan or determine whether a project is even achievable within specific constraints.

- Identify and flag future risks: Spotting potential issues that could hinder implementation or delivery—like scalability problems, security concerns, or unanticipated dependencies—requires experience and foresight.

- Provide tailored, complete solutions: GenAI might help generate code or documentation, but it cannot deliver fully customized implementations for complex projects. Understanding and integrating the broader context of your system is a human job.

- Make architecture decisions: Choosing the best architecture for a specific use case demands a deep understanding of your unique requirements, trade-offs, and goals—something AI lacks.

- Perform root-cause analysis: Debugging a tricky issue, like one in a Kubernetes cluster, requires not only technical expertise but also a deep understanding of business logic, data flow, and critical thinking.

GenAI requires integration

The real challenge with GenAI today lies in its "integration problem"—a task that demands human ingenuity, foresight, judgment, good technical knowledge, and experience in systems design. "I have a good tool, how do I use it with the rest of my tools?"

In the context of company adoption, integrating GenAI effectively into systems and workflows requires significant effort. This includes developing tools to analyze data, link it with LLMs, extract meaningful results, and feed those outputs into existing systems. For example, adopting tools like LangChain (https://www.langchain.com/), ensuring AI security, and properly processing data for AI pipelines are critical components of this process, and all of them need to be developed by software engineers.
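As a rough illustration of what that glue work looks like, here is a minimal sketch of one integration step: clean the input, build a prompt, call a model, and validate the answer before anything downstream trusts it. Everything in it is an assumption made for the example: the ticket format, the category names, and the `llm` callable, which stands in for whatever client or framework you actually use. It does not show LangChain's API.

```python
import json
from typing import Callable

# Hypothetical categories the downstream system knows how to handle.
ALLOWED = ("bug", "billing", "other")

def build_prompt(ticket: dict) -> str:
    """Clean the raw record and ask for a machine-readable answer."""
    text = " ".join(ticket["body"].split())  # collapse stray whitespace
    return (
        "Classify this support ticket as 'bug', 'billing' or 'other'. "
        'Reply with JSON like {"category": "bug"}.\n\n'
        f"Ticket: {text}"
    )

def classify(ticket: dict, llm: Callable[[str], str]) -> str:
    """Call the model, then validate its answer before trusting it."""
    raw = llm(build_prompt(ticket))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return "needs_human_review"
    category = parsed.get("category") if isinstance(parsed, dict) else None
    if category not in ALLOWED:
        return "needs_human_review"
    return category

def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; a real client would go here.
    return '{"category": "billing"}'

def rambling_llm(prompt: str) -> str:
    # Simulates a model that ignores the requested output format.
    return "Sure! I think this is probably a billing issue."

ticket = {"body": "I was charged   twice\nfor my subscription."}
print(classify(ticket, fake_llm))      # billing
print(classify(ticket, rambling_llm))  # needs_human_review
```

Most of the code is not about the model at all. It is about cleaning data, constraining output, and deciding what happens when the answer cannot be trusted, which is exactly the engineering work that does not go away.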

There is an excellent interview with Daniel Miessler, creator of Fabric, who explains the above challenges perfectly when showcasing how his new framework works, and why he decided to create it.

A glimpse into the future

Based on the above, here is how I see the future - or hope to see it.

Software Engineers leveraging AI

Software engineering will continue to offer strong opportunities for the foreseeable future, especially in automation and integration. However, the field is already undergoing significant changes, not in the number of jobs available but in the demands, daily workflows, and nature of those roles.

GenAI has made learning significantly more accessible. For instance, picking up a new language like Python becomes much easier if you already know JavaScript or Java. The ability to ask open-ended questions and explore existing open-source code written by experienced developers is invaluable—a resource many aspiring developers would gladly pay for.

It also automates daily programming workflows like never before. For example, while I’m capable of writing an Nginx proxy configuration on my own, using a GenAI model to draft a template for 10 service routes lets me focus on refining the output, cutting the time required to just 10% of what it would have taken before 2022. This is also how I first learned enough Terraform for most of my tasks, and it's now helping me learn Golang the same way.
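To give a sense of the kind of boilerplate involved, here is a small sketch that renders a 10-route Nginx proxy template. The service names, ports, and the single `proxy_set_header` line are hypothetical placeholders; a real configuration would need timeouts, TLS, and whatever else your setup requires.

```python
def render_routes(services):
    """Render one Nginx `location` block per (name, port) pair."""
    blocks = []
    for name, port in services:
        blocks.append(
            f"    location /{name}/ {{\n"
            f"        proxy_pass http://{name}:{port}/;\n"
            f"        proxy_set_header Host $host;\n"
            f"    }}"
        )
    return "\n".join(blocks)

def render_server(services, listen_port=80):
    """Wrap the location blocks in a single `server` block."""
    return (
        "server {\n"
        f"    listen {listen_port};\n"
        f"{render_routes(services)}\n"
        "}\n"
    )

# Hypothetical services: service-1 .. service-10 on ports 8001 .. 8010.
services = [(f"service-{i}", 8000 + i) for i in range(1, 11)]
config = render_server(services)
print(config)
```

Whether the draft comes from a script like this or from a GenAI model, the valuable part is the review afterwards: checking that each route, header, and upstream actually matches the system you run.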

It's not that bad

Seeing my daily workflow turn into a new kind of routine (for which I have no name), I can only say that my profession as a software engineer has indeed been transformed.

In the future, software engineering will focus more on solving real-world issues and integrating complex systems into workflows. While these elements are central to today’s software engineering, the way they are approached will evolve significantly. Engineers will spend more time constructing complex software infrastructures and incorporating advanced data processing into their routines, rather than optimizing their algorithms.

GenAI signifies only the start of the large-scale democratization of AI. Knowledge of machine learning and AI will become much more widespread. As these tools become more accessible, it’s likely that individual developers and even households will train and use their own small machine learning models. AI integration may become so common that it fades into the background, treated as a given rather than a novelty. Future software engineers will be able to tune LLMs and machine learning models to their needs and gain a competitive edge. Companies will follow with company-specific AI implementations.

In the workplace, problems will shift to a more customer-centric approach. "What code do I need to write in my IDE?" will become "How can I combine systems X and Z to save time for the customer?" This shift will bring new challenges, including:

- A potential decline in innovative code, as faster development times will take precedence.

- The need for developers to spend more time learning and cultivating creativity, as over-reliance on AI for basic problem-solving could erode foundational skills.

AI is reshaping software engineering, but the key here is how engineers adapt and leverage these new tools to improve their daily routines and stimulate innovation.

It's not all good, either

AI reliance poses a significant risk: humans learn and innovate by solving problems. If GenAI takes over simpler tasks, developers may miss opportunities to build critical thinking and creativity. Those skills have always been essential for innovating and managing complex systems. Historically, creative and critical thinking have been the key strengths that set software engineers apart from machines and automation.

The above also risks creating overly complex infrastructures that few can understand and optimize. We’ve seen this in industries where automation has introduced inefficiencies or biases, requiring extensive human intervention to fix.

Creativity isn't just a skill; it's humanity's edge over machines. If we allow it to diminish, we lose what sets us apart and make ourselves easier to replace.

The solution isn’t to avoid AI but to use it wisely. Engineers must balance using AI’s strengths with cultivating the creativity and insight that make us human. The next step is clear: we must adapt to thrive alongside AI, not in competition with it.

Don't Compete with AI.

Over the years, I've come across skeptics who avoid using AI, particularly in software engineering. They argue that relying on AI feels like outsourcing their own job, making them more replaceable. Some believe AI will eventually replace humans entirely, and software engineering is no exception. Others embrace a "purist" philosophy—insisting that every aspect of computer science and operations must remain human-driven.

But here's the reality: AI is already here, and it's changing the way software engineers work. Countless professionals use GenAI to speed up learning and streamline daily tasks. Ignoring it is like ignoring a new tool that’s redefining the field. To stay relevant, adaptation is not optional - it's essential.

AI is not the enemy...

Every complaint I have heard is not about AI itself but about how people use it, especially when decisions are made without fully understanding its capabilities or limitations. We've seen what happens in the corporate world: massive investments, widespread hysteria, layoffs, and wasted billions. While individual software engineers can’t change corporate strategies, understanding where to focus your efforts can make all the difference.

AI and its derivatives are here to stay. As software engineers, we need to integrate these tools into our workflows—not as replacements for our skills, but as enhancements.

Here’s the truth: if you don’t want to be replaced by a machine, don’t act like one.

Fearing AI and trying to compete with it sets you up for failure. Racing to be faster or more efficient than a machine is a losing game—and the quickest path to obsolescence. Instead, reframe the narrative: use AI as a tool, not a competitor. Treat it as a powerful assistant or an advanced search engine, not as something that takes over your job.

The tasks requiring critical thinking, creativity, and human insight remain ours to own. Despite the corporate world’s obsession with automation, these uniquely human skills are more essential now than ever.

Keep Learning and Adapting

- Embrace new tools like GenAI to learn faster and automate mundane tasks. Use AI to ask better questions: “How can I learn X? What’s the best way to approach Y?” Then, take the time you save and invest it in learning new skills.

- Use GenAI Strategically. Incorporate AI into your workflow as a productivity booster. Automate repetitive tasks, but reserve your energy and focus for work that requires critical thinking and problem-solving.

- Double Down on Your Humanity. Read books, study human behavior, and dive into what keeps people up at night. The problems worth solving are often deeply human—and understanding them is key to staying indispensable.

- Invest in Your Brain. Your brain is the most intelligent and adaptable tool you’ll ever have. Neuroscience shows that humans can learn and adapt in ways even the most advanced supercomputers struggle to replicate. In software engineering—and any other knowledge-based field—this edge is priceless.

- Invest in lifelong learning. Stay curious and keep growing. Learn new programming languages, explore advanced concepts, or dive into AI integration strategies. The ability to learn and adapt is your most valuable asset, and lifelong learning has always been a cornerstone for software engineers, no matter the circumstances.

Conclusion

What makes us human isn’t just the speed of delivery or learning but our unique ability to apply knowledge, make critical decisions, and imagine solutions to unsolved problems. Stanislas Dehaene, a renowned French neuroscientist, highlights this in his book How We Learn (an excellent read), emphasizing the unmatched capability of the human mind to process and apply knowledge. This reinforces the idea that the best computing machine in the universe is still the human mind—a fact often overlooked in corporate decision-making. But that’s a discussion for another time.

GenAI and AI in general are here to stay. Use them for what they are: tools to enhance your capabilities, not replace them. Understand how they work and how to make them work for you, automating what can be automated while doubling down on what makes you human.

The best way to stay relevant isn’t to fear AI but to leverage it. Focus on what makes you irreplaceable, and let AI handle the rest.