AI-Ready Infrastructure: The Composable IT Approach with Kubernetes Operators

Introduction

At TM Forum DTW Ignite 2025 in Copenhagen — one of the most significant global events for the telecommunications industry — I shared how Vodafone’s CELL platform and ODA Canvas are redefining what’s possible for telco IT through automation and standardization.

CELL was designed to modernize the backend systems that have been at the core of Vodafone IT business operations for approximately two decades, supporting customer-centric operations. Articles have been written about CELL, and a launch event offered insights into its design and implementation.

By reading those articles one can grasp what CELL really is.

Behind CELL, however, lies a bigger “why”: a vision aligned with where the entire IT industry is headed — toward Composable IT, with Kubernetes at the Core.

Composable IT

To understand what Composable IT is, let's first examine the problems it tackles.

Big companies, big problems.

A company that reaches a certain size has, by definition, been successful: that success is what allowed it to grow. Success is great, and the pursuit of it is what drives companies to innovate.

What is less discussed in technical circles, however, is what happens after the company becomes successful. Usually, success, along with all the good things it brings, is accompanied by tons of problems. Bureaucracy and difficulty innovating are among them. As customer bases grow, so do internal teams.

No matter how big both become, one thing is certain: business continuity is essential. A company can have any issues and any vision it wants, as long as these do not disrupt the customer expectations that made it successful in the first place.

At this critical point, companies must ask:

- How do we modernize underlying service infrastructure without disrupting live services?

- How do we adopt new technologies gradually without risky, large-scale releases?

- How do we adopt GenAI to modernize our operations without disrupting our existing business flow?

These same challenges make it nearly impossible to adopt AI-driven operations, since AI requires clean, consistent, and automated inputs to function effectively.

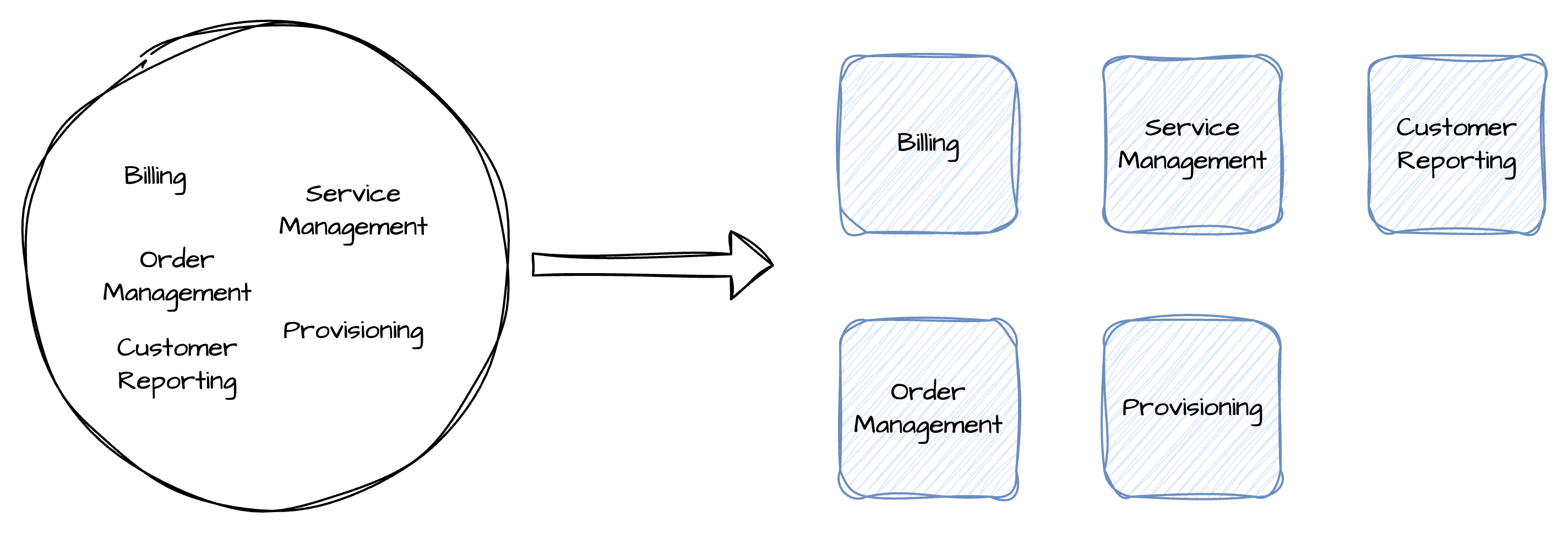

This is when companies begin "breaking down the monolith," redesigning their infrastructure — often alongside organizational changes — with smaller, more autonomous components in mind, creating the structural foundation that naturally leads into a Composable IT approach.

Moving on to Composable IT

Modern Composable IT addresses these challenges by breaking monolithic infrastructures into smaller, autonomous components that are:

- Independently deployed

- Environment-agnostic

- Cloud-native

- Microservices-based

These headless components communicate through APIs, most often REST. This is where microservices and the most well-known orchestrator, Kubernetes, come into play — and what many people think of when they hear “Composable IT.” Over the last few years, Kubernetes adoption has surged, with major players not only using it but also contributing to its core.

For years, this approach was sufficient to meet the needs of both small and large enterprises, with Kubernetes serving as the “business unit orchestrator” — a platform to deploy business applications, and little more.

But today, many companies are aiming to move toward AI‑driven operations — yet this shift is difficult because much of their existing infrastructure and processes have not been optimized or automated to support it.

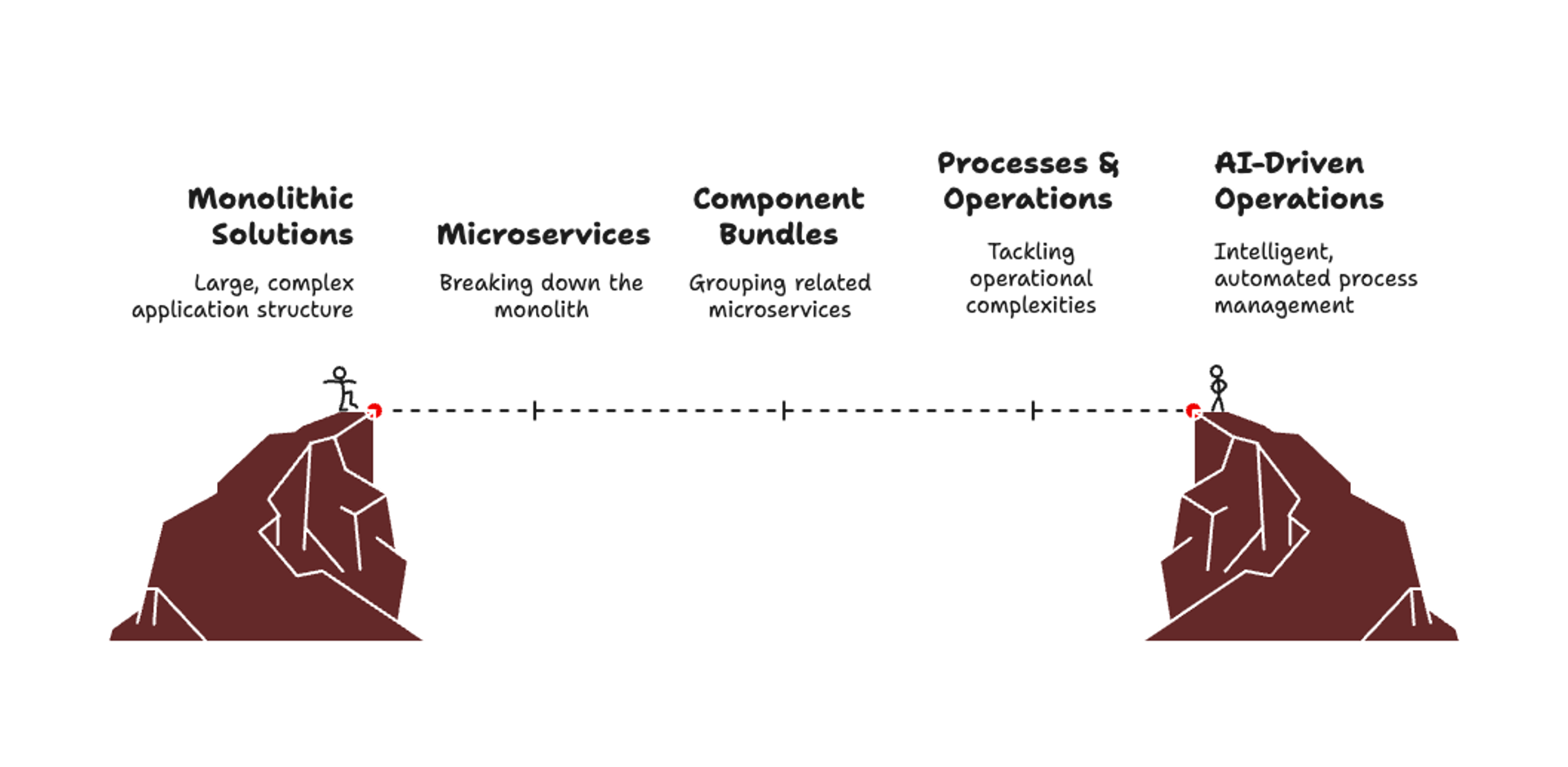

In a true Composable IT approach, AI is built upon the infrastructure and foundation created by optimizing and automating company processes. If we try to formalize the journey toward becoming an AI-driven company, it might look like this:

- Break the entire business model into autonomous components — adopting microservices can help.

- Formalize processes, then automate them.

- Orchestrate the company using AI, leveraging the automation mechanisms already in place.

Therefore, transitioning to microservices should be a stepping stone to a broader transformation, not the end goal. Without orchestration and automation as a foundation, complexity soon returns in a new form, making AI‑driven ambitions even harder to achieve.

"Microservices Are Not Enough"

In fact, they have enough problems on their own.

A paradox of Kubernetes is that while it greatly simplifies running complex distributed systems, it introduces its own complexity. Many enterprises find Kubernetes “powerful yet complex” as they scale usage.

Imagine a fleet of more than 220 microservices in production, like the one my former team now runs on Vodafone’s DXL platform. Suddenly a simple global policy change, such as enforcing JWT validation on every endpoint, can balloon into a monumental manual effort.

Relying on an API gateway’s UI or brittle CLI scripts to update each route is slow, error-prone, and risky. Inconsistent service naming, version drift, and varied environments create a complex setup where one misstep can cause downtime or expose data. Even with scripts, teams often end up back in manual remediation loops.

The main issue with this approach is the heavy reliance on manual steps and policy applications across hundreds of distinct routes — a tedious, time‑consuming, and error‑prone process.

It doesn’t have to be this way. The road to Composable IT involves standardizing and automating such processes, and in the microservices world, Kubernetes Operators are a powerful way to achieve it.
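To make the contrast concrete, here is a minimal sketch of a global policy applied as one declared rule rather than 220 hand edits. The `Route` model, the `jwt-validation` plugin name, and the service names are all hypothetical; no real API gateway is being called. The point is only that the operation is idempotent, so running it again changes nothing.

```python
from dataclasses import dataclass, field


@dataclass
class Route:
    """Hypothetical model of one API gateway route."""
    name: str
    plugins: set[str] = field(default_factory=set)


def enforce_policy(routes: list[Route], required_plugin: str) -> list[str]:
    """Ensure every route carries the required plugin.

    Returns the names of the routes that had to be changed, so a second
    run over the same routes returns an empty list (idempotent).
    """
    changed = []
    for route in routes:
        if required_plugin not in route.plugins:
            route.plugins.add(required_plugin)
            changed.append(route.name)
    return changed


# 220 hypothetical routes; every tenth one already validates JWTs.
routes = [
    Route(f"service-{i}", {"jwt-validation"} if i % 10 == 0 else set())
    for i in range(220)
]

first_pass = enforce_policy(routes, "jwt-validation")
second_pass = enforce_policy(routes, "jwt-validation")
print(len(first_pass), len(second_pass))  # prints: 198 0
```

An Operator applies the same idea continuously: the policy is declared once, and the platform keeps every route aligned with it.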

Many companies are realizing that adopting microservices alone is not enough to achieve the agility, environmental portability, and development speed required to transition to AI‑driven operations.

Composable IT's core: Automation & Kubernetes Operators

At the core of Composable IT's adoption is Process Optimization, and this is where Kubernetes Operators excel.

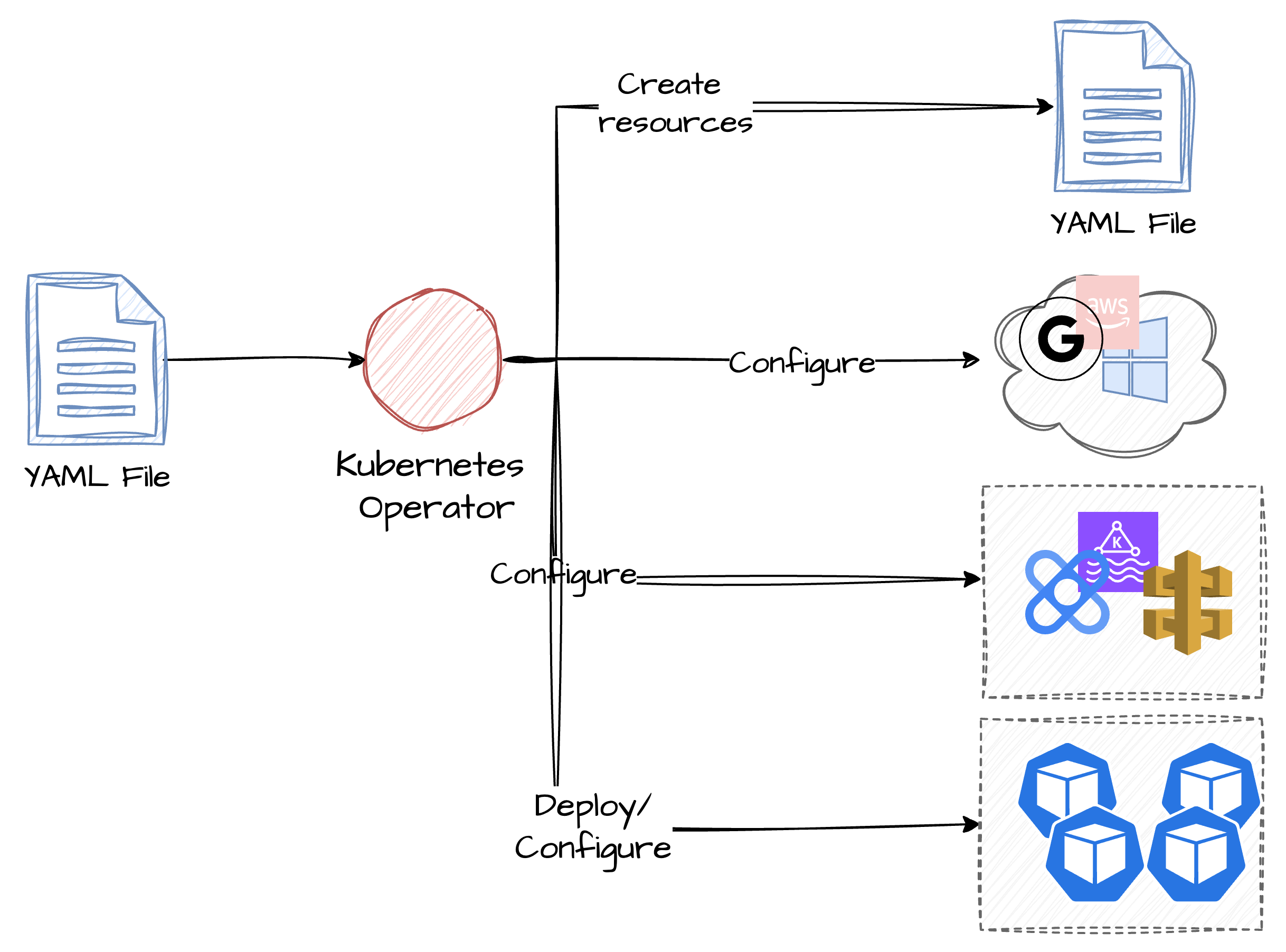

Kubernetes Operators extend the Kubernetes API by introducing Custom Resource Definitions (CRDs) and controller logic to automate operational tasks. An Operator watches for changes to custom resources—representing application-specific configurations or lifecycle events—and reconciles the actual cluster state to match the declared intent.

This paradigm enables teams to package best-practice operational knowledge (e.g., backups, scaling, failover) into reusable software, so day‑2 activities occur automatically without manual intervention.
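The reconciliation idea can be sketched in a few lines. This is an in-memory illustration only, under the assumption that desired and actual state are plain dictionaries; the resource names and fields are invented, and a real Operator would watch the Kubernetes API through a framework such as Operator SDK or Kubebuilder rather than compare dicts.

```python
def reconcile(desired: dict[str, dict], actual: dict[str, dict]) -> list[str]:
    """Mutate `actual` until it matches `desired`; return the actions taken."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actual[name] = dict(spec)
            actions.append(f"create {name}")
        elif actual[name] != spec:
            actual[name] = dict(spec)
            actions.append(f"update {name}")
    # Anything running that nobody declared is removed.
    for name in list(actual):
        if name not in desired:
            del actual[name]
            actions.append(f"delete {name}")
    return actions


# Declared intent, as a custom resource might express it (hypothetical fields).
desired = {
    "orders-db": {"replicas": 3, "backup": "daily"},
    "billing-db": {"replicas": 2, "backup": "hourly"},
}
# Observed state: one resource has drifted, one is missing, one is orphaned.
actual = {
    "orders-db": {"replicas": 1, "backup": "daily"},
    "legacy-db": {"replicas": 1, "backup": "none"},
}

first = reconcile(desired, actual)
second = reconcile(desired, actual)
print(first)   # ['update orders-db', 'create billing-db', 'delete legacy-db']
print(second)  # []
```

The second call returns no actions because the state already matches the intent, which is what lets an Operator run the loop continuously without side effects.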

Many companies have taken advantage of this capability since its inception. For example:

- RabbitMQ provides a Kubernetes Operator to deploy and manage RabbitMQ clusters.

- Bloomberg leverages KServe's (formerly KFServing) Kubernetes Operator for production ML serving, as described in "The Journey to build Bloomberg’s ML Inference Platform using KServe".

- QuestDB Cloud published a case study on building a custom Operator to handle automated provisioning, upgrades, backups, and failover for its SaaS environment.

- Airbnb uses Kubernetes Operators to manage Flink infrastructures.

Kubernetes Operators are now a well-established technology, so much so that there is a hub for them at OperatorHub.io, the equivalent of Docker Hub for Kubernetes Operators.

Standardising Telecommunication Automation

Kubernetes Operators can do awesome things in automation. They can transform the technical processes and the deployment model of a company and bring the entire organization into a new era.

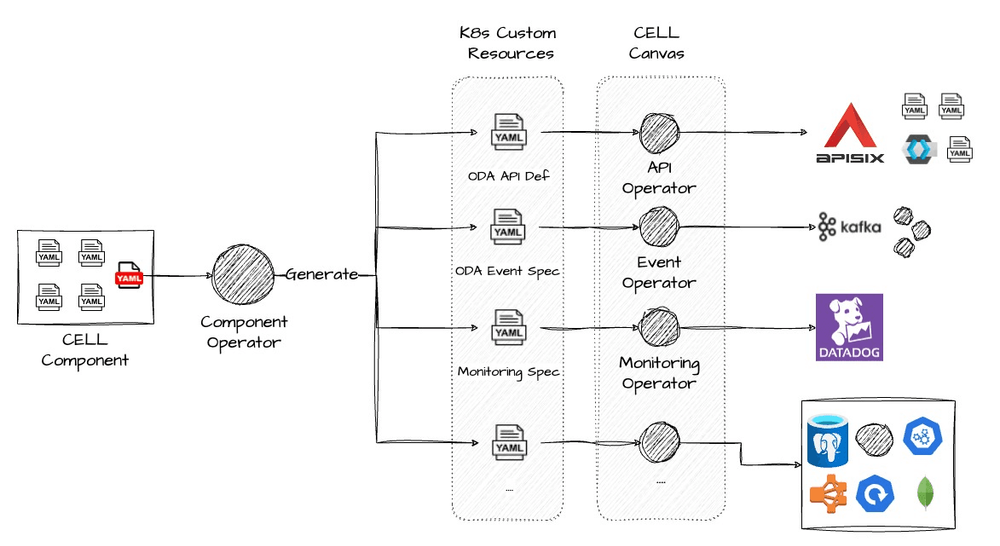

Recognizing the opportunities provided by Kubernetes Operators, telecommunications engineers developed a standardized automation framework: the ODA Canvas. As part of the broader Open Digital Architecture (ODA), the Canvas defines a common set of Custom Resources and controller patterns to capture deployment and runtime intent across large-scale Kubernetes clusters. By baking TM Forum best practices into CRD schemas and operator workflows, the ODA Canvas streamlines cluster provisioning, policy enforcement, and lifecycle management, bringing consistency and repeatability to telecom deployments.

CELL, the platform I designed and worked on for the past three years at Vodafone Greece, has adopted ODA, and the ODA Canvas specifically, to power its automation infrastructure.

Canvas in Vodafone: CELL

The Canvas is what allows Vodafone Greece to move from vision to action, with CELL already delivering this in production. What differentiates CELL from the rest of our clusters is that it embeds automation as a first-class citizen.

As an example of the power of automation with Kubernetes Operators, in traditional setups exposing an API could take up to three weeks of cross-team coordination. In CELL, Kubernetes Operators handle the entire process in just three seconds.

What we have essentially done is write our documentation in Software rather than in Confluence. We have standardized our procedures, allowing them to be expressed in Code, ultimately leading to Automation. Our Kubernetes operators read a manifest of the deployed components to understand their environmental requirements, and upon deployment they work to bring the entire infrastructure to the desired state.

This is intent-driven automation in practice: it allows us not only to accelerate development but also to create applications that adapt seamlessly to their environment, without the extensive planning and overhead often associated with traditional microservices clusters.
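As a rough illustration of that manifest-driven flow, the sketch below derives infrastructure steps from a component's declared requirements. The manifest fields (`exposed_apis`, `depends_on`), the component names, and the step wording are hypothetical; they are not taken from the ODA Component specification or from CELL's actual operators.

```python
# Hypothetical component manifest; field names are illustrative only.
manifest = {
    "name": "product-catalog",
    "exposed_apis": [
        {"name": "catalog", "path": "/catalog/v1", "port": 8080},
        {"name": "health", "path": "/health", "port": 8081},
    ],
    "depends_on": ["party-management"],
}


def plan(manifest: dict, existing_routes: set[str]) -> list[str]:
    """Derive the infrastructure steps needed to satisfy the manifest."""
    component = manifest["name"]
    steps = []
    for api in manifest["exposed_apis"]:
        # Only APIs that are not yet routed need work.
        if api["path"] not in existing_routes:
            steps.append(f"expose {component}{api['path']} on port {api['port']}")
    for dependency in manifest["depends_on"]:
        steps.append(f"grant {component} access to {dependency}")
    return steps


steps = plan(manifest, existing_routes={"/health"})
for step in steps:
    print(step)
```

The component only states what it needs; which gateway, which network rules, and which environment satisfy those needs is left to the platform.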

Industry Adoption & Trends

The shift toward intent-driven architectures is industry-wide:

- Cloud-Native Maturity: In the 2024 CNCF Annual Survey, 89% of organizations reported using Kubernetes in production, and one-quarter said nearly all their development and deployment workflows are cloud-native, signaling readiness for higher-order abstractions like Operators.

- GitOps & Automation: According to the same CNCF survey, 77% of respondents said much or nearly all of their deployment practices and tools adhere to GitOps principles—an automation approach that pairs naturally with the reconciliation loops of Operators.

- Operator Ecosystem Growth: OperatorHub.io now hosts hundreds of Operators covering databases, messaging systems, service meshes, and more, up from a handful just a few years ago (operatorhub.io). Examples include the DataStax Kubernetes Operator for Apache Cassandra (released in May 2025) and CloudNativePG, which was updated only days before this writing.

- Enterprise Case Studies: Beyond telecom, companies like Bloomberg (ML inference), Airbnb (Flink), and RabbitMQ (clustering and failover) have published case studies on how Operators cut provisioning times from days to minutes and improved reliability by 30–50%.

Operators are no longer niche — they’re becoming the standard for codifying infrastructure logic and ensuring platform consistency.

Why this matters now

We're entering a phase where infrastructure is no longer managed - it's self-managing.

Kubernetes Operators allow business and technical teams to describe the desired outcome, and let the platform decide which path to follow towards the goal. Developers can now write their policies in code, not in Confluence pages.

The benefits will compound over time: faster innovation cycles, fewer outages, less error-prone procedures, lower cost-to-serve, and the freedom to adopt new technologies without destabilizing existing services.

From Clusters To Control Planes

The industry has embraced Kubernetes as the go‑to platform for orchestrating microservices that power business applications. But its future lies equally — if not more — in operational excellence.

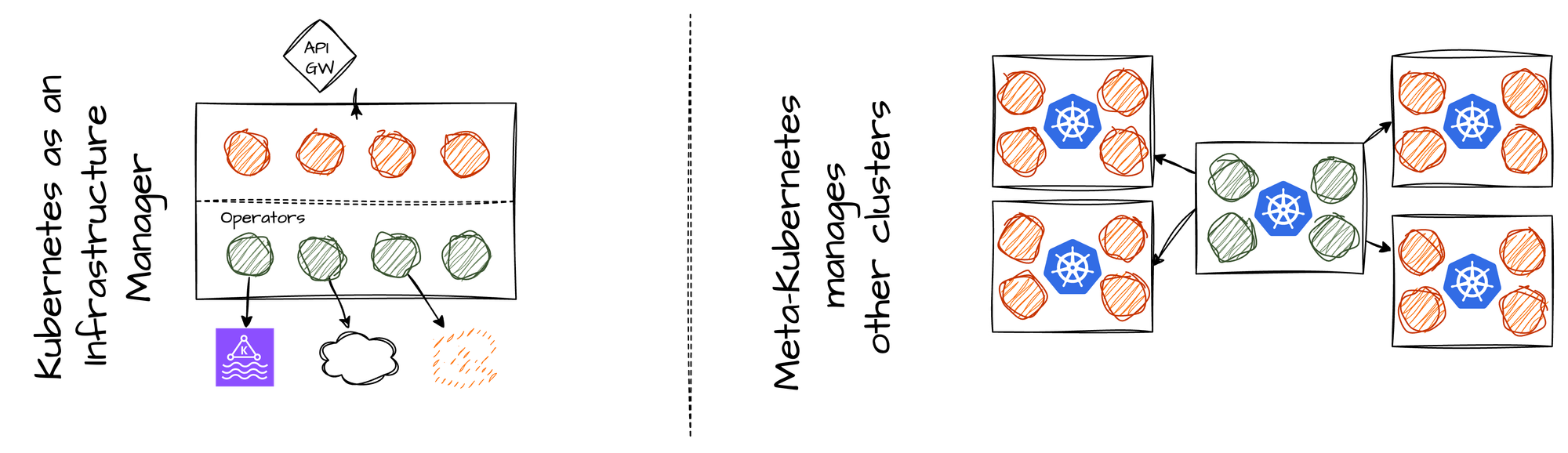

With continuous declarative infrastructure automation, Kubernetes can define and manage far more than workloads: network connectivity, platform services, entire business systems, ticketing workflows — all represented as CRDs and governed by declarative intent.

It’s not far‑fetched to imagine Kubernetes Operators supplanting policy manuals and operational runbooks entirely, enabling companies to use a single cluster to spawn, configure, and govern additional clusters — effectively running their enterprise as code.

Conclusion

Current industry trends speak of "The Return To The Monolith" and of companies ditching Kubernetes, some in favor of monoliths. Today, however, Kubernetes is much more than an environment in which to run your business applications. It enables intent-based company orchestration, infrastructure automation, and more.

My work in CELL was focused on proving this point. Drawing on my experience with the ODA Canvas, Kubernetes Operators, and observations of the challenges faced by large hyperscalers, I’ve found that the journey toward Composable IT delivers substantial benefits — simplifying operations, enabling organizations to scale more effectively, and paving the way for GenAI adoption.